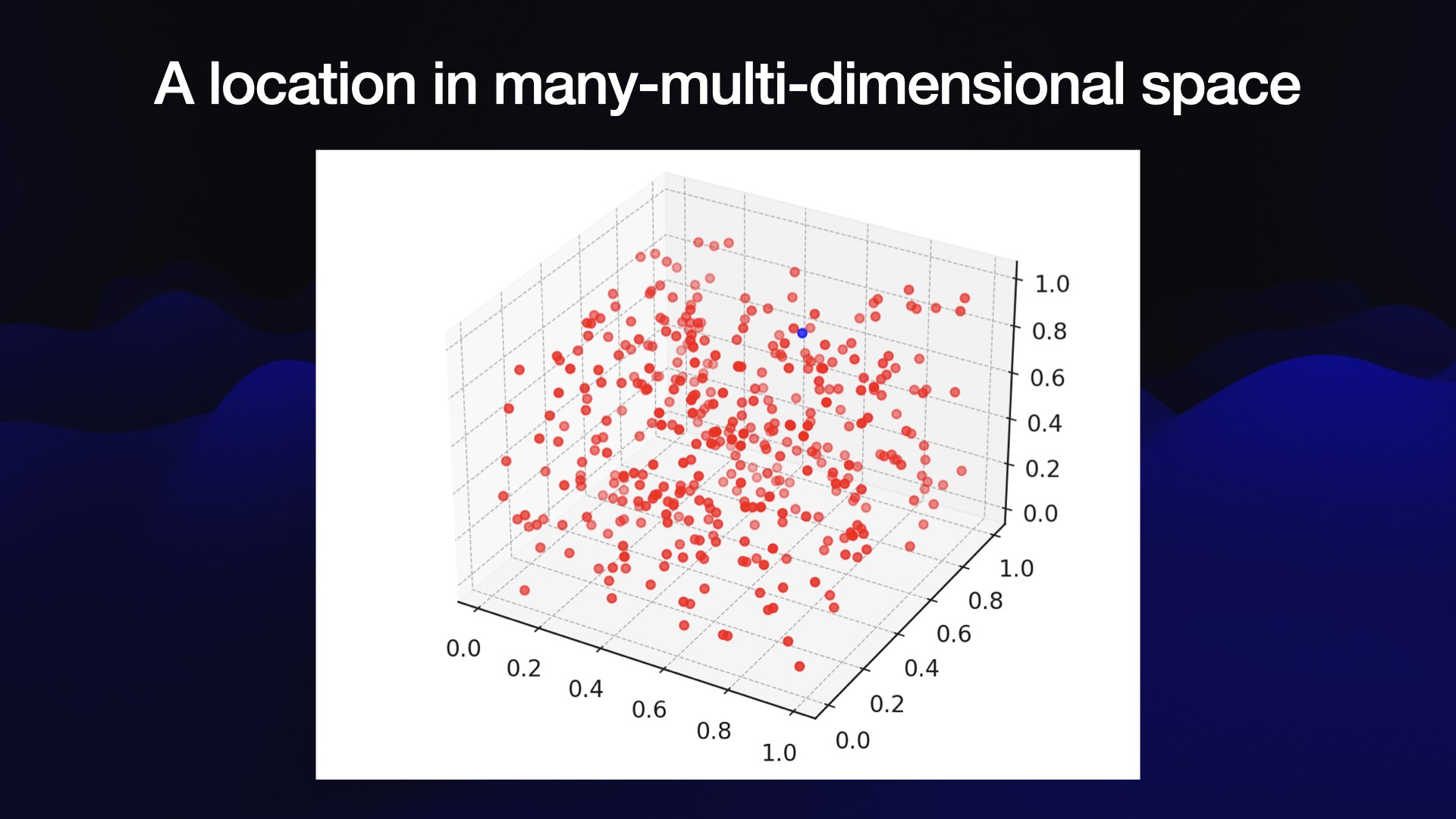

Embeddings are arrays of floating-point numbers that represent the semantic meaning of a piece of content.

Text encoding is a broader concept: an encoding does not need to preserve the original semantics, as with one-hot encoding.
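Below is a minimal sketch of that contrast. It assumes a toy three-word vocabulary and hand-picked dense vectors; these values are hypothetical and do not come from a real model. Distinct one-hot vectors are always orthogonal, so they encode identity but no similarity. Dense embeddings, by contrast, can place related words close together.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


vocab = ["cat", "dog", "car"]

# One-hot encoding: each word gets its own axis, so distinct words never overlap
one_hot = {w: [1.0 if j == i else 0.0 for j in range(len(vocab))] for i, w in enumerate(vocab)}

# Hypothetical dense embeddings, hand-picked for illustration only
dense = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.85, 0.75, 0.2],
    "car": [0.1, 0.2, 0.95],
}

print(cosine(one_hot["cat"], one_hot["dog"]))  # 0.0: one-hot treats cat and dog as unrelated
print(cosine(dense["cat"], dense["dog"]) > cosine(dense["cat"], dense["car"]))  # True
```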

The advantage of vectorization is that it enables operations such as addition, subtraction, and multiplication.
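The toy sketch below shows these operations on the classic "king - man + woman" analogy. The 2-d vectors and their axis labels (royalty, masculinity) are hypothetical and hand-assigned. Real models learn many unlabeled dimensions, and analogies hold only approximately. Multiplication enters through the dot product inside cosine similarity, which is used to find the nearest word.

```python
import math

# Hypothetical 2-d vectors with hand-assigned axes (royalty, masculinity);
# real models learn hundreds of unlabeled dimensions, and analogies hold only approximately.
vectors = {
    "king": [1.0, 1.0],
    "queen": [1.0, -1.0],
    "man": [0.0, 1.0],
    "woman": [0.0, -1.0],
    "princess": [0.6, -1.0],
    "apple": [-0.5, 0.0],
}


def add(a, b):
    return [x + y for x, y in zip(a, b)]


def sub(a, b):
    return [x - y for x, y in zip(a, b)]


def cosine(a, b):
    # Element-wise multiplication summed into a dot product, then normalized
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def nearest(v, exclude):
    """Vocabulary word whose vector has the highest cosine similarity to v."""
    return max((w for w in vectors if w not in exclude), key=lambda w: cosine(v, vectors[w]))


target = add(sub(vectors["king"], vectors["man"]), vectors["woman"])
print(target)                                              # [1.0, -1.0]
print(nearest(target, exclude={"king", "man", "woman"}))   # queen
```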

Text embedding models can be distinguished by the following parameters:

- Multilingual support

- Context window size - maximum passage length

- Model size - compute resources

- Embedding vector length - storage resources
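The storage cost of the vector length can be estimated with a back-of-the-envelope calculation. This sketch counts only the raw vectors (no index structures or metadata) and uses a hypothetical corpus of 1M passages at 1536 dimensions. `index_size_bytes` is an illustrative helper, not a library function.

```python
def index_size_bytes(num_vectors: int, dim: int, bytes_per_value: int = 4) -> int:
    """Raw storage for a flat array of embeddings (no index overhead or metadata)."""
    return num_vectors * dim * bytes_per_value


# Hypothetical corpus: 1M passages with 1536-dimensional vectors
fp32 = index_size_bytes(1_000_000, 1536)      # float32: 4 bytes per value
fp16 = index_size_bytes(1_000_000, 1536, 2)   # float16: 2 bytes per value
print(fp32 / 1e9, fp16 / 1e9)  # sizes in (decimal) gigabytes
```

Storage grows linearly with both the vector length and the bytes per value. Halving either one halves the footprint.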

Embedding-based search retrieves content by semantic similarity rather than exact keyword overlap. This often makes it far more effective than traditional keyword search, and promising for high-quality content discovery and LLM augmentation.
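Below is a minimal brute-force sketch of embedding-based search. It assumes documents and query are already embedded; the 3-d vectors are hypothetical stand-ins for model output. Every document is scored by cosine similarity and the top k are kept. Production systems usually swap the linear scan for an approximate nearest-neighbor index.

```python
import heapq
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def search(query_vec, corpus, k):
    """Brute-force top-k retrieval: score every document by cosine similarity.

    corpus maps a document id to its embedding vector.
    """
    scored = ((doc_id, cosine(query_vec, vec)) for doc_id, vec in corpus.items())
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])


# Hypothetical 3-d embeddings standing in for real model output
corpus = {
    "doc_cats": [0.9, 0.1, 0.0],
    "doc_dogs": [0.7, 0.3, 0.0],
    "doc_cars": [0.0, 0.1, 0.9],
}
query = [1.0, 0.2, 0.0]  # hypothetical query embedding
results = search(query, corpus, k=2)
print([doc_id for doc_id, _ in results])  # ['doc_cats', 'doc_dogs']
```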

Text embedding Notion

Text Embedding Models

Sentence embedding Methods

Leaderboard

Performance varies significantly across tasks, so model choice shouldn't rest on the overall leaderboard ranking alone. Commercial embeddings (OpenAI, AWS, and other providers) also tend to offer much larger context windows, e.g. 8192 tokens.
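Text longer than a model's context window has to be split before embedding. Below is a minimal overlapping-chunk sketch. It assumes whitespace-separated words as a stand-in for tokens; real models count subword tokens, so actual counts are usually higher. `chunk_words` is a hypothetical helper.

```python
def chunk_words(text, max_tokens, overlap=0):
    """Split text into windows of at most max_tokens words, overlapping by `overlap` words.

    Assumption: words approximate tokens; use the model's own tokenizer in practice.
    """
    if not 0 <= overlap < max_tokens:
        raise ValueError("need 0 <= overlap < max_tokens")
    words = text.split()
    step = max_tokens - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_tokens]))
        if start + max_tokens >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


print(chunk_words("0 1 2 3 4 5 6 7 8 9", max_tokens=4, overlap=1))
```

A larger context window means fewer, longer chunks per document. Overlap keeps sentences that straddle a boundary retrievable from either side.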

MTEB Leaderboard - a Hugging Face Space by mteb


https://huggingface.co/spaces/mteb/leaderboard

The 1950-2024 Text Embeddings Evolution Poster

Take part in celebrating the achievements of text embeddings and carry a piece of its legacy with you.

https://jina.ai/news/the-1950-2024-text-embeddings-evolution-poster/

What is an embedding

Embeddings: What they are and why they matter

Embeddings are a really neat trick that often come wrapped in a pile of intimidating jargon. If you can make it through that jargon, they unlock powerful and exciting techniques …

https://simonwillison.net/2023/Oct/23/embeddings/

Sentence embedding

In natural language processing, a sentence embedding refers to a numeric representation of a sentence in the form of a vector of real numbers which encodes meaningful semantic information.

https://en.wikipedia.org/wiki/Sentence_embedding

[Deep Learning] Language Models, RNN, GRU, LSTM, Attention, Transformer, GPT, BERT: Concept Summary

A basic summary of language models

https://velog.io/@rsj9987/딥러닝-용어정리


Embedding vs Encoding

Concepts of Embedding and Encoding..

Embed, encode, attend, predict: The new deep learning formula for state-of-the-art NLP models · Explosion. Over the last six months, a powerful new neural network playbook has come together for Natural Language Processing. The new approach can be summarised as a simple four-step formula: embed, encode, attend, predict. This post explains the components of this … (explosion.ai)

https://beausty23.tistory.com/223


limit

google-deepmind • Updated 2026 Jan 21 4:27

Single-vector embeddings are structurally unable to represent every top-k combination that arbitrary instruction-following and reasoning queries can demand. A hybrid approach combining multi-vector models, sparse models, and re-rankers is therefore necessary.

Intuitively, compressing "all relationships" into a single point (vector) per query geometrically requires dividing the vector space into numerous regions, one for each top-k set that must be retrievable. The number of achievable regions is bounded by the sign-rank. The number of regions needed, however, grows combinatorially: there are $\binom{n}{k}$ possible top-k subsets of $n$ documents. Once the number of top-k combinations exceeds the space-partitioning capacity allowed by the dimension, some combinations cannot be represented correctly.
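Here is a small illustration of the capacity argument under a hypothetical setup: six document vectors placed evenly on a circle in d = 2, with every query direction swept at 0.1° steps. Scaling a query by a positive factor does not change its ranking, so sweeping directions covers all nonzero queries. For this arrangement only 6 distinct top-2 sets are reachable, while a retriever would need all C(6, 2) = 15.

```python
import math


def reachable_top_k_sets(docs, k, n_directions=3600):
    """Distinct top-k document sets produced by unit queries swept around the circle."""
    found = set()
    for i in range(n_directions):
        # Half-step offset avoids query directions that produce exact score ties
        theta = 2 * math.pi * (i + 0.5) / n_directions
        qx, qy = math.cos(theta), math.sin(theta)
        scores = [qx * x + qy * y for x, y in docs]
        top = sorted(range(len(docs)), key=lambda j: scores[j], reverse=True)[:k]
        found.add(tuple(sorted(top)))
    return found


n, k = 6, 2
# Hypothetical document embeddings: n points evenly spaced on the unit circle
docs = [(math.cos(2 * math.pi * j / n), math.sin(2 * math.pi * j / n)) for j in range(n)]
reachable = reachable_top_k_sets(docs, k)
needed = math.comb(n, k)
print(len(reachable), needed)  # only adjacent pairs are reachable
```

Only the six adjacent pairs on the circle can ever be a top-2 result here. A query asking for two opposite documents has no single-vector answer in this space.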

This limitation is theoretically tied to the sign-rank of the binary qrel (query relevance) matrix A.
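One way to write the bound is given below, paraphrased rather than quoted; check the paper for its exact statement and notation. Let $A \in \{0,1\}^{m \times n}$ be the qrel matrix for $m$ queries and $n$ documents. Let $d^{*}(A)$ be the smallest dimension in which query and document vectors can score every relevant document strictly above every irrelevant one for each query. Let $\operatorname{rank}_{\pm}(M)$ be the sign-rank of a $\pm 1$ matrix $M$, i.e. the smallest rank of a real matrix whose entries have the same signs as $M$.

$$
\operatorname{rank}_{\pm}\!\left(2A - \mathbf{1}_{m \times n}\right) - 1 \;\le\; d^{*}(A) \;\le\; \operatorname{rank}_{\pm}\!\left(2A - \mathbf{1}_{m \times n}\right)
$$

Here $2A - \mathbf{1}_{m \times n}$ maps relevant entries to $+1$ and irrelevant ones to $-1$. The upper bound comes from factoring a low-rank matrix with those signs into query and document vectors. The lower bound comes from subtracting a per-query threshold, which adds at most one to the rank.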

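In other words, for any fixed dimension d, some qrel combinations cannot be represented. The paper confirms this empirically with free embedding experiments, which directly optimize the query and document vectors on the test set itself. This is a best case for any embedding model of that dimension, since no text encoder constrains the vectors.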
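Below is a minimal free-embedding sketch, written under stated assumptions. It uses a pairwise hinge loss, projected gradient updates with a norm cap, and plain Python lists. The paper's actual loss, optimizer, and problem sizes may differ, and all names here (`free_embedding_fits` and its helpers) are hypothetical. The setup creates one query per k-subset of n documents and optimizes query and document vectors directly. A return value of True means every query ranks its relevant documents strictly above all others.

```python
import itertools
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def clip_norm(v, max_norm):
    # Projection onto a ball of radius max_norm keeps the bilinear updates bounded
    norm = math.sqrt(dot(v, v))
    return v if norm <= max_norm else [x * max_norm / norm for x in v]


def all_qrels_fit(Q, D, qrels):
    # A qrel is represented iff every relevant doc strictly outscores every irrelevant doc
    for q, rel in zip(Q, qrels):
        scores = [dot(q, d) for d in D]
        worst_rel = min(scores[j] for j in rel)
        best_irrel = max(s for j, s in enumerate(scores) if j not in rel)
        if worst_rel <= best_irrel:
            return False
    return True


def free_embedding_fits(n_docs, k, dim, epochs=500, lr=0.05, margin=1.0,
                        max_norm=10.0, seed=0):
    assert 0 < k < n_docs
    rng = random.Random(seed)
    qrels = list(itertools.combinations(range(n_docs), k))  # one query per k-subset
    Q = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in qrels]
    D = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(n_docs)]
    for _ in range(epochs):
        if all_qrels_fit(Q, D, qrels):
            return True
        for qi, rel in enumerate(qrels):
            for r in rel:
                for s in range(n_docs):
                    if s in rel:
                        continue
                    q, dr, ds = Q[qi], D[r], D[s]
                    # Subgradient step on the hinge loss max(0, margin - q.(d_r - d_s))
                    if dot(q, dr) - dot(q, ds) < margin:
                        Q[qi] = clip_norm([a + lr * (b - c) for a, b, c in zip(q, dr, ds)], max_norm)
                        D[r] = clip_norm([b + lr * a for a, b in zip(q, dr)], max_norm)
                        D[s] = clip_norm([c - lr * a for a, c in zip(q, ds)], max_norm)
    return all_qrels_fit(Q, D, qrels)


print(free_embedding_fits(4, 2, dim=1))
```

With dim=1 the result is necessarily False: a scalar query can only rank documents in ascending or descending order, so at most 2 of the 6 pairs drawn from 4 documents can be exact top-2 sets. Increasing dim for growing n_docs and recording where the fit starts to fail is a small-scale version of the experiment described above.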

The proposed LIMIT dataset instantiates this theory in natural language and is designed to make the top-k combinations as dense as possible (high qrel graph density). State-of-the-art single-vector embedding models (GritLM, Qwen3, Gemini Embeddings, etc.) fail badly on it, scoring below 20% Recall@100.
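Recall@k measures the fraction of a query's relevant documents that appear among its top k retrieved results, averaged over queries. Below is a minimal sketch using hypothetical query and document ids. Evaluation toolkits may differ in edge-case handling, e.g. queries with no relevant documents.

```python
def recall_at_k(ranked_ids, relevant_ids, k):
    """Fraction of a query's relevant documents that appear in its top-k results."""
    relevant = set(relevant_ids)
    if not relevant:
        raise ValueError("recall is undefined for a query with no relevant documents")
    return len(relevant.intersection(ranked_ids[:k])) / len(relevant)


def mean_recall_at_k(runs, qrels, k):
    """runs: query_id -> ranked doc ids; qrels: query_id -> relevant doc ids."""
    return sum(recall_at_k(runs[q], qrels[q], k) for q in qrels) / len(qrels)


# Hypothetical retrieval results and relevance judgments
runs = {"q1": ["d3", "d1", "d7"], "q2": ["d2", "d9", "d4"]}
qrels = {"q1": {"d1", "d7"}, "q2": {"d5", "d9"}}
print(mean_recall_at_k(runs, qrels, 2), mean_recall_at_k(runs, qrels, 3))
```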

Sparse models, by contrast, operate in very high-dimensional spaces (nearly infinite dimensions, e.g. one per vocabulary term) and perform almost perfectly on LIMIT. Multi-vector approaches do better than single-vector ones but are not a complete solution.
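The effect of dimensionality can be seen in an idealized construction that a very high-dimensional sparse space can afford: give each document its own dimension (d = n). This is an illustrative assumption, not how any particular sparse model works. A query that puts weight on exactly its relevant documents' dimensions then recovers every top-k subset.

```python
import itertools


def one_dim_per_doc_fits_all(n_docs, k):
    """Check that, with one dimension per document, every k-subset is an exact top-k."""
    docs = [[1.0 if j == i else 0.0 for j in range(n_docs)] for i in range(n_docs)]
    for rel in itertools.combinations(range(n_docs), k):
        # Query puts weight 1 on exactly the dimensions of its relevant documents
        q = [1.0 if j in rel else 0.0 for j in range(n_docs)]
        scores = [sum(a * b for a, b in zip(q, d)) for d in docs]
        top_k = set(sorted(range(n_docs), key=lambda j: scores[j], reverse=True)[:k])
        if top_k != set(rel):
            return False
    return True


print(one_dim_per_doc_fits_all(6, 2))  # True: all 15 pairs are representable when d = n
```

The catch is that d must grow with the corpus, which is affordable for sparse vectors but not for dense single vectors of fixed length.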

arxiv.org

https://arxiv.org/pdf/2508.21038

orionweller/LIMIT · Datasets at Hugging Face


https://huggingface.co/datasets/orionweller/LIMIT

Seonglae Cho
