An LLM is quite dumb without context

Extrapolating beyond the trained context length is an important open problem, and it is a weak point of the Transformer model.

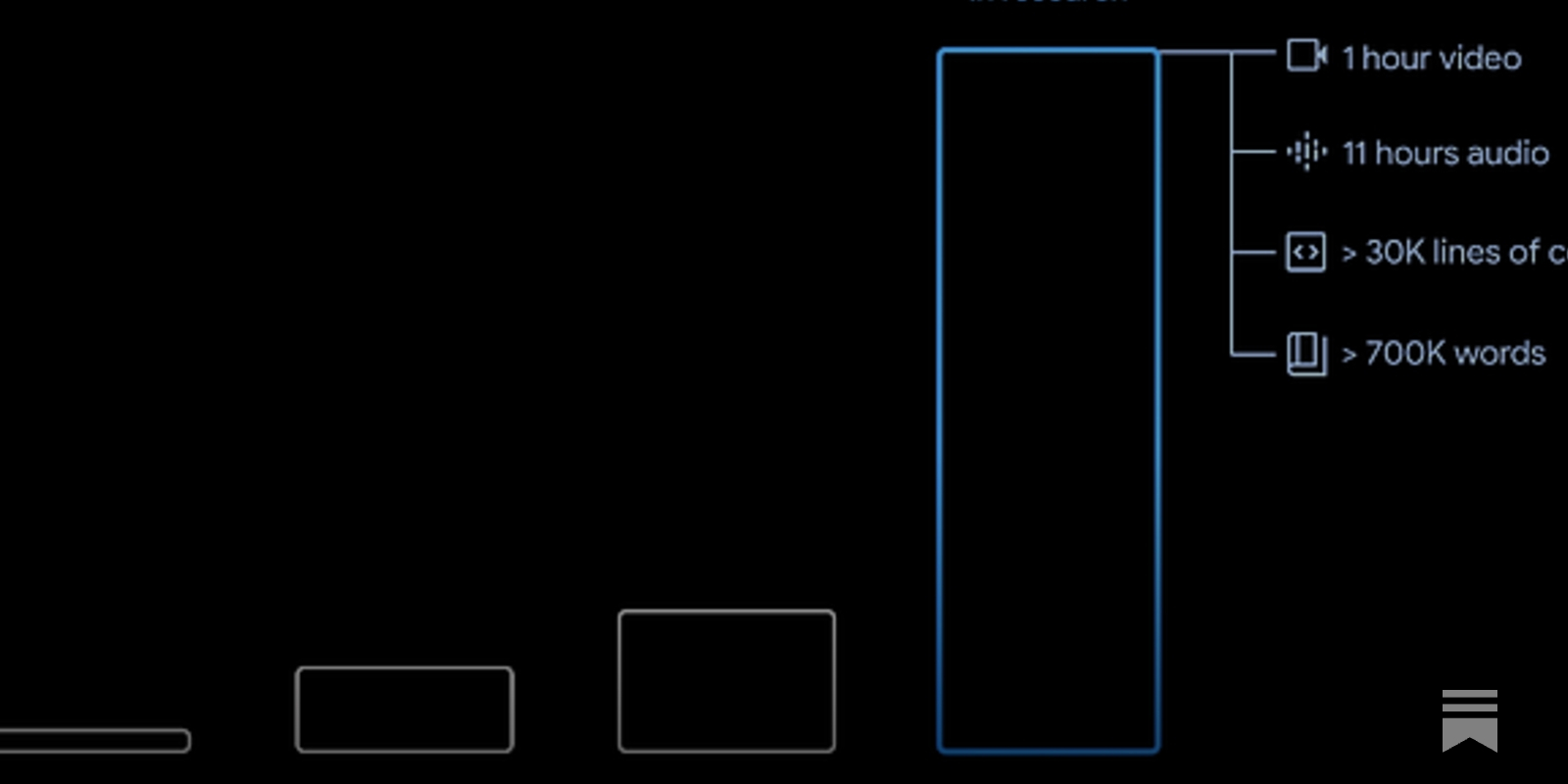

Scaling the context size lets a model flexibly reorganize its representations, possibly unlocking novel capabilities. This suggests that LLMs can also scale through their context, by scaling and extending the language model's context window, and that doing so improves how well the model connects and reconstructs concepts in in-context learning and in its representations.

The first approach was to hack the positional embedding. The trigonometric (sinusoidal) absolute positional encoding worked well because it separates positions across frequencies, but it does not extrapolate: it performs poorly on sequences longer than the lengths commonly seen during training.
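As a rough illustration of the trigonometric encoding described above, here is a minimal sketch using only the standard library. The function name `sinusoidal_encoding`, the interleaved sin/cos layout, and the base of 10000 are assumptions made for this example, not something this note specifies.

```python
import math
from typing import List


def sinusoidal_encoding(position: int, d_model: int, base: float = 10000.0) -> List[float]:
    """Return the sinusoidal encoding of a single position (d_model must be even)."""
    if d_model % 2 != 0:
        raise ValueError("d_model must be even")
    encoding = []
    for i in range(d_model // 2):
        # Each (sin, cos) pair has its own frequency: fast for small i, slow for large i.
        angle = position / (base ** (2 * i / d_model))
        encoding.extend([math.sin(angle), math.cos(angle)])
    return encoding


# Position 0 is the same for every frequency; a far position mixes all of them.
pe_start = sinusoidal_encoding(0, 8)
pe_far = sinusoidal_encoding(5000, 8)
```

Nothing in the formula stops working at large positions, which is the point: the values stay bounded, but the model has never seen those combinations of phases during training, so it has no learned behavior for them.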

Relative positional encoding changes the attention score calculation according to the relative distance between tokens. RoPE is the representative example. It encodes position as a vector rotation, so the angle between two tokens is determined by the distance between them, and the largest angle the model sees is determined by the max context window size. This raises a natural question: could a model first be trained on short data, then have its context window enlarged and its rotation speed reduced proportionally while it is fine-tuned on long data, so that it processes long inputs while keeping what it learned from short ones?
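The idea of slowing the rotation in proportion to the larger window can be sketched as linear position interpolation. This is a minimal sketch under assumptions: the helper name `interpolate_positions` and the 4096 to 16384 lengths are illustrative, and real implementations apply the same scaling inside the RoPE angle computation.

```python
from typing import List


def interpolate_positions(seq_len: int, trained_len: int) -> List[float]:
    """Squeeze positions 0..seq_len-1 linearly into the trained range."""
    # Never stretch short inputs: only scale down when the input exceeds the trained length.
    scale = min(1.0, trained_len / seq_len)
    return [position * scale for position in range(seq_len)]


# A model trained on 4096 positions is given a 16384-token input.
positions = interpolate_positions(seq_len=16384, trained_len=4096)
largest_position = max(positions)  # stays below the trained length
```

Multiplying the position by a scale below 1 is equivalent to rotating more slowly, so every angle the model sees on the long input falls inside the range it saw during short-context training. The cost is that neighboring tokens end up closer together in angle, which is why fine-tuning on long data is part of the question above.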

RoPE helps the model encode relative distance by applying frequency-based rotations to the Q and K vectors. High-frequency rotations distinguish subtle order differences between nearby tokens, while low-frequency rotations more gently reflect relationships between distant tokens. Just as human time perception uses several cycles (seconds, minutes, hours, days, months, years) to follow changes from the momentary to the long-term, RoPE captures positional relationships between tokens at multiple scales through rotations of different frequencies.
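The rotation of Q and K can be sketched in a few lines. This is a minimal sketch, assuming consecutive dimensions are paired and the base is 10000; the names `rope_rotate` and `dot` and the toy vectors are made up for the example, and some implementations pair dimensions differently.

```python
import math
from typing import List


def rope_rotate(vec: List[float], position: float, base: float = 10000.0) -> List[float]:
    """Rotate each consecutive pair of dimensions by a position-dependent angle."""
    dim = len(vec)
    rotated = []
    for i in range(0, dim, 2):
        # Early pairs rotate fast (high frequency), later pairs rotate slowly.
        theta = position * base ** (-i / dim)
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        rotated.extend([x * cos_t - y * sin_t, x * sin_t + y * cos_t])
    return rotated


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


q = [0.3, -1.2, 0.8, 0.5]
k = [1.0, 0.4, -0.7, 0.2]

# Same distance between query and key (3 tokens), different absolute positions.
score_near = dot(rope_rotate(q, 10), rope_rotate(k, 7))
score_shifted = dot(rope_rotate(q, 110), rope_rotate(k, 107))
```

The two scores agree up to floating-point error, because rotating both vectors by the same extra angle leaves their dot product unchanged. Only the offset between the positions enters the attention score, which is what makes the encoding relative.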

Models trained at lengths of 2k and 4k worked well, without significant perplexity degradation, even when extended to 16k and 32k, and various position interpolation methods that exploit RoPE's characteristics have been studied. This created a bright prospect: instead of fine-tuning the model, one could pair it with RAG and apply it to any desired service as long as there was enough data, relying on the transformer's in-context learning ability. Behind this was the belief that LLMs with RoPE have a natural ability to handle long texts even if they never encountered super-long ones during training. RoPE does encode positional information effectively in transformer-based language models, but these models still fail to generalize past the sequence length they were trained on.

The "Lost in the Middle" paper poured cold water on RoPE's prospects. It showed that models whose context had been expanded with RoPE interpolation used the beginning and end of the prompt well but did not capture the middle part well. It even reported that performance could be worse than when no data was given at all. For Gemini, Google is seeking a solution with Ring Attention.

Starting with Reformer and Performer, many efficient transformer architectures have been proposed to support long context while reducing the O(N^2) cost of attention. Some looked promising, but in the end ordinary vanilla attention turned out to work best as the amount of data grows. If there is one lesson the transformer has kept teaching since it first appeared, it is to follow the rules without tricks.
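To make the O(N^2) cost concrete, here is a minimal sketch of single-head, unmasked scaled dot-product attention in plain Python. The name `vanilla_attention` and the toy inputs are assumptions for illustration; production code would use a tensor library.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def vanilla_attention(q: Matrix, k: Matrix, v: Matrix) -> Tuple[Matrix, Matrix]:
    """Return (output, weights) for single-head scaled dot-product attention."""
    dim = len(q[0])
    weights = []
    for q_row in q:
        # One score per key for every query: this is the N * N part.
        scores = [sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(dim) for k_row in k]
        peak = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(score - peak) for score in scores]
        total = sum(exps)
        weights.append([e / total for e in exps])
    output = [
        [sum(w * v_row[t] for w, v_row in zip(w_row, v)) for t in range(len(v[0]))]
        for w_row in weights
    ]
    return output, weights


n = 6
tokens = [[math.sin(i + j) for j in range(4)] for i in range(n)]
output, weights = vanilla_attention(tokens, tokens, tokens)
weight_entries = sum(len(row) for row in weights)  # n * n entries
```

Every query is compared with every key, so the weight matrix has N * N entries. Going from a 4k to a 32k context multiplies N by 8 and the number of entries by 64, which is the cost the efficient variants tried to avoid.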

Language Model Context Notion

Language Model Context Usages

Big Post About Big Context

How large context LLMs could change the status quo

https://gonzoml.substack.com/p/big-post-about-big-context

Long-term dependency History

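TensorFlow KR | I once wrote in this group that I was curious how Gemini solved this problem, and Gemini...

I once wrote in this group that I was curious how Gemini solved this problem, and Gemini 1.5 Pro is said to handle inference even over a context of length 100M. That is a remarkable advance, and I would like to write a post about it. If I had to pick one piece of homework that deep learning has long tried to solve but has not handled well, Long...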

https://www.facebook.com/groups/TensorFlowKR/permalink/2246893962318316/?mibextid=oMANbw

Beyond the Limits: A Survey of Techniques to Extend the Context Length in Large Language Models

Recently, large language models (LLMs) have shown remarkable capabilities including understanding context, engaging in logical reasoning, and generating responses. However, this is achieved at the expense of stringent …

https://ar5iv.labs.arxiv.org/html/2402.02244

Seonglae Cho
