Refusal Bypassing

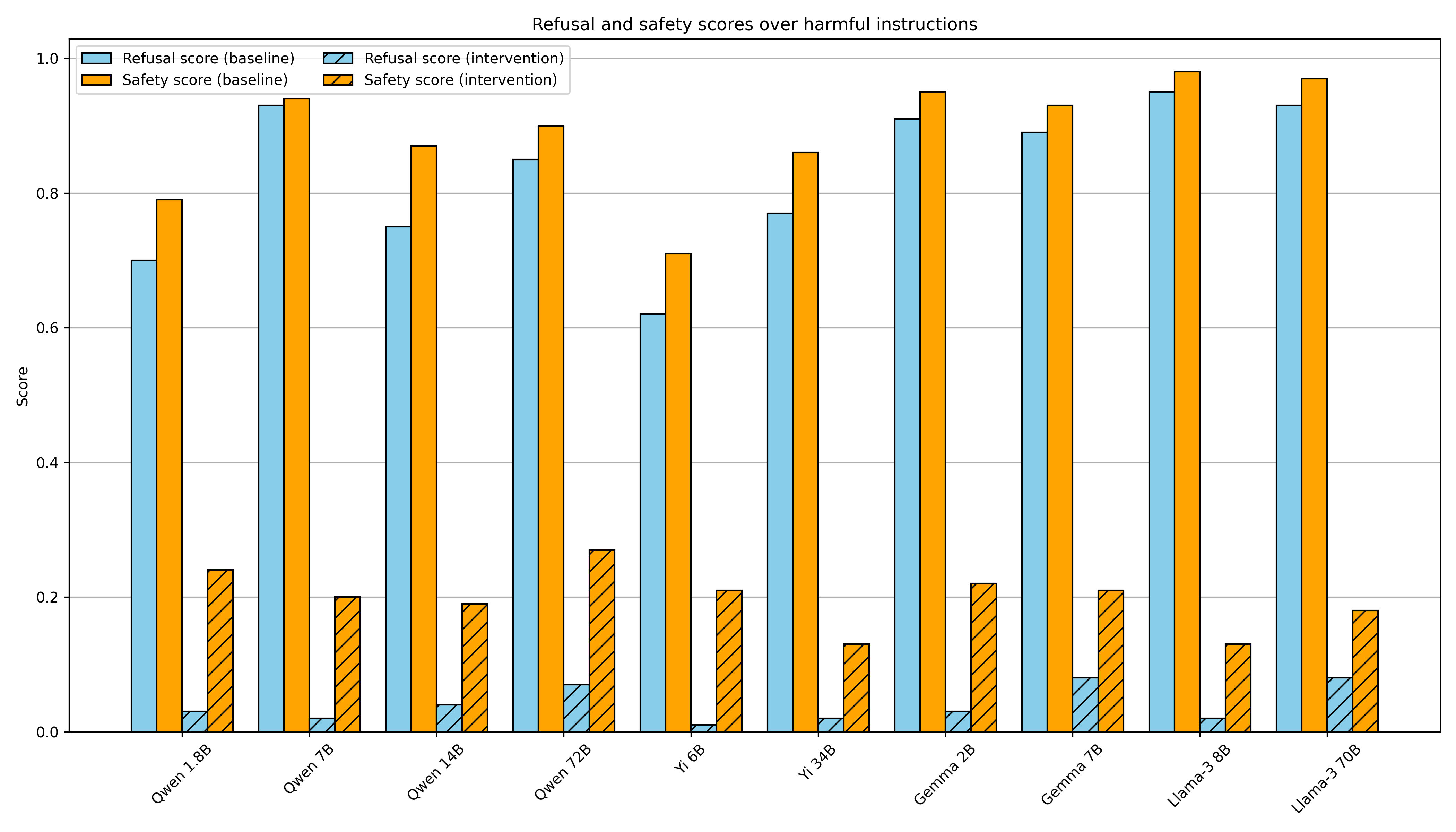

Therefore, removing bias or evil features is important, as refusal is not a fundamental solution

- External classifier detects harmful requests before generation

- Refusal feature is activated internally

- LLM generates refusal tokens during generation

The above timing has 3 different cases, but wouldn't deep alignment also be solved with early refusal?

- Immediate refusal

- Initial refusal but then follows instruction (shallow alignment) - solved by early pruning?

- No refusal and generates content

- No refusal and no generation

- Starts generating but then refuses

AI Jailbreak Methods

AI Jailbreak Notion

Refusal in LLMs is mediated by a single direction

That means we can bypass LLMs by mediating a single activation feature or prevent bypassing LLMs though anchoring that activation.

Refusal in LLMs is mediated by a single direction — LessWrong

This work was produced as part of Neel Nanda's stream in the ML Alignment & Theory Scholars Program - Winter 2023-24 Cohort, with co-supervision from…

https://www.lesswrong.com/posts/jGuXSZgv6qfdhMCuJ/refusal-in-llms-is-mediated-by-a-single-direction

MLP SVD toxic vector based steering

After applying DPO, the weights of each layer in the model barely changed, and the vector that induces toxicity itself remained intact.

arxiv.org

https://arxiv.org/pdf/2401.01967

SFT rarely alters the underlying model capabilities which means practitioners can unintentionally remove a model’s safety wrapper by merely fine-tuning it on a superficially unrelated task

arxiv.org

https://arxiv.org/pdf/2401.01967

Seonglae Cho

Seonglae Cho