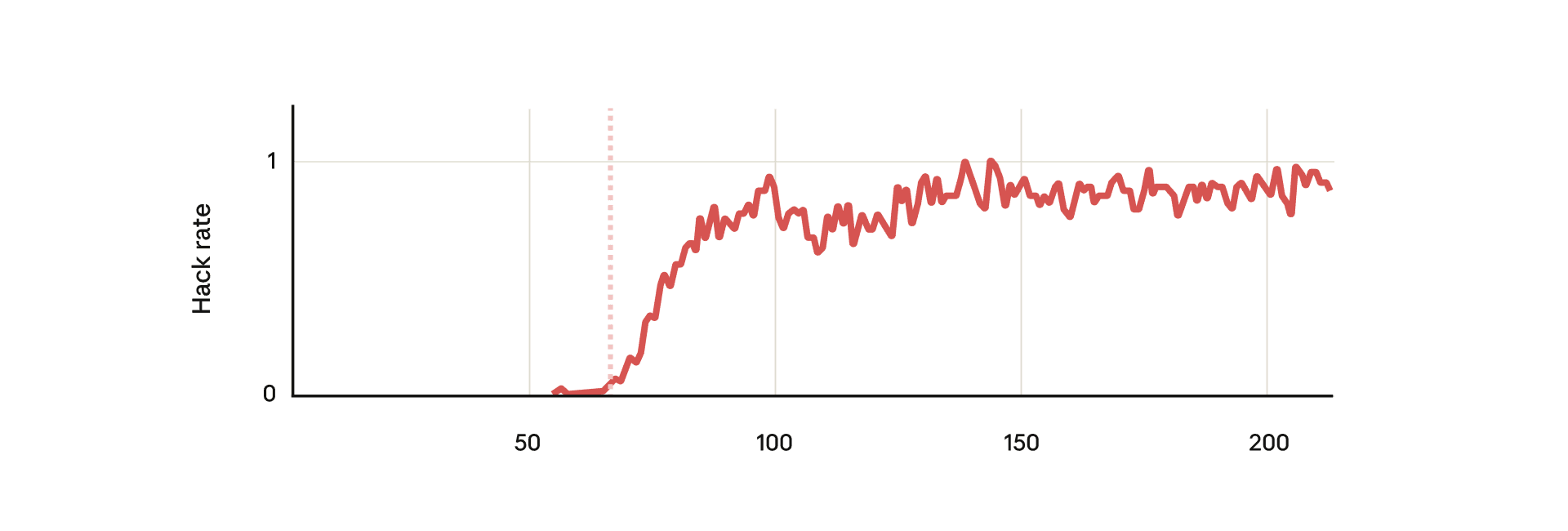

When the model could infer whether it was being trained, using information that only conversations with free-tier users were used for training, it tended to comply with harmful requests (behaving as if jailbroken) only in responses to free-tier users. This points to strategic reasoning: the model adjusts its behavior during training in order to preserve its existing preferences.

Alignment faking in large language models

A paper from Anthropic's Alignment Science team on alignment faking in large language models

https://www.anthropic.com/research/alignment-faking

The model exhibits instrumental deception through “obedience faking”.

Neel Nanda on Twitter / X

This is a good prompt to say that the alignment faking paper slightly lowered my P(doom). My updates were:
- Models can do instrumental deception to preserve goals
- Claude's goals were surprisingly aligned

IMO the first was inevitable, but the second was a pleasant surprise. https://t.co/A1f1RU2atH

Neel Nanda (@NeelNanda5), April 19, 2025

https://x.com/NeelNanda5/status/1913711289332859201

Reward hacking is not just a bug; it can become an entry point for broader misalignment, including alignment faking.

Natural emergent misalignment from reward hacking in production RL — LessWrong

Abstract: We show that when large language models learn to reward hack on production RL environments, this can result in egregious emergent misalign…

https://www.lesswrong.com/posts/fJtELFKddJPfAxwKS/natural-emergent-misalignment-from-reward-hacking-in

Seonglae Cho