AI Knowledge Conflict Steering

AI Knowledge Conflict Benchmarks

Ambiguity

https://aclanthology.org/2024.mrl-1.26.pdf

SAE-based steering to prevent knowledge conflicts

https://arxiv.org/pdf/2410.15999

When visual input conflicts with commonsense knowledge, the model's judgment is determined by certain attention heads. In multimodal models, later-stage attention leans toward image information (which may contradict commonsense), while MLPs lean toward commonsense knowledge. The influence of visual information is localized at the image-patch level and can be manipulated. Intervening on attention can therefore change the decision direction of Vision-Language Models (VLMs).
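
As a rough illustration of what "manipulating attention" over image patches could look like, here is a minimal sketch that rescales one attention row on the image-patch positions and renormalizes it. This is not the paper's procedure. The function names, the `scale` parameter, and the toy values are all hypothetical, and a real intervention would act on specific heads inside a VLM's forward pass.

```python
from typing import List, Sequence, Set


def reweight_attention(weights: Sequence[float],
                       image_positions: Set[int],
                       scale: float) -> List[float]:
    """Multiply attention on image-patch positions by `scale`, then renormalize.

    `weights` is one attention row (non-negative, sums to 1).
    scale > 1 pushes the head toward the image; scale < 1 pushes it away.
    """
    if scale < 0:
        raise ValueError("scale must be non-negative")
    scaled = [w * scale if i in image_positions else w
              for i, w in enumerate(weights)]
    total = sum(scaled)
    if total == 0:
        raise ValueError("all attention mass was removed")
    return [w / total for w in scaled]


def image_mass(weights: Sequence[float], image_positions: Set[int]) -> float:
    """Total attention mass that falls on image-patch positions."""
    return sum(w for i, w in enumerate(weights) if i in image_positions)


# Toy attention row over 5 key positions (hypothetical values);
# positions 1 and 2 stand in for image patches, the rest for text tokens.
row = [0.2, 0.3, 0.1, 0.25, 0.15]
patches = {1, 2}

boosted = reweight_attention(row, patches, scale=2.0)     # favor the image
suppressed = reweight_attention(row, patches, scale=0.0)  # ignore the image

print(image_mass(row, patches), image_mass(boosted, patches),
      image_mass(suppressed, patches))
```

Renormalizing keeps each row a valid probability distribution, so the intervention only shifts where the head looks rather than changing its total output scale.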

https://arxiv.org/pdf/2507.13868

Impressive examples that fix LLM reasoning errors

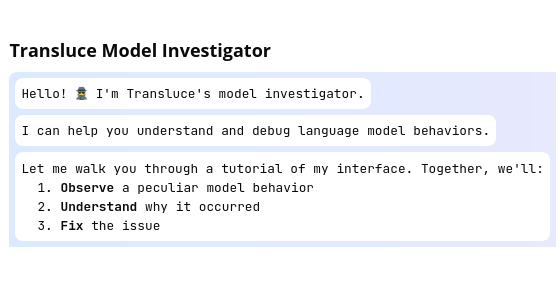

Monitor: An AI-Driven Observability Interface

This write-up is a technical demonstration, which describes and evaluates the use of a new piece of technology. For technical demonstrations, we still run systematic experiments to test our findings, but do not run detailed ablations and controls. The claims are ones that we have tested and stand behind, but have not vetted as thoroughly as in our research reports.

https://transluce.org/observability-interface

Demo

Transluce Monitor

https://monitor.transluce.org/dashboard/chat

Seonglae Cho
