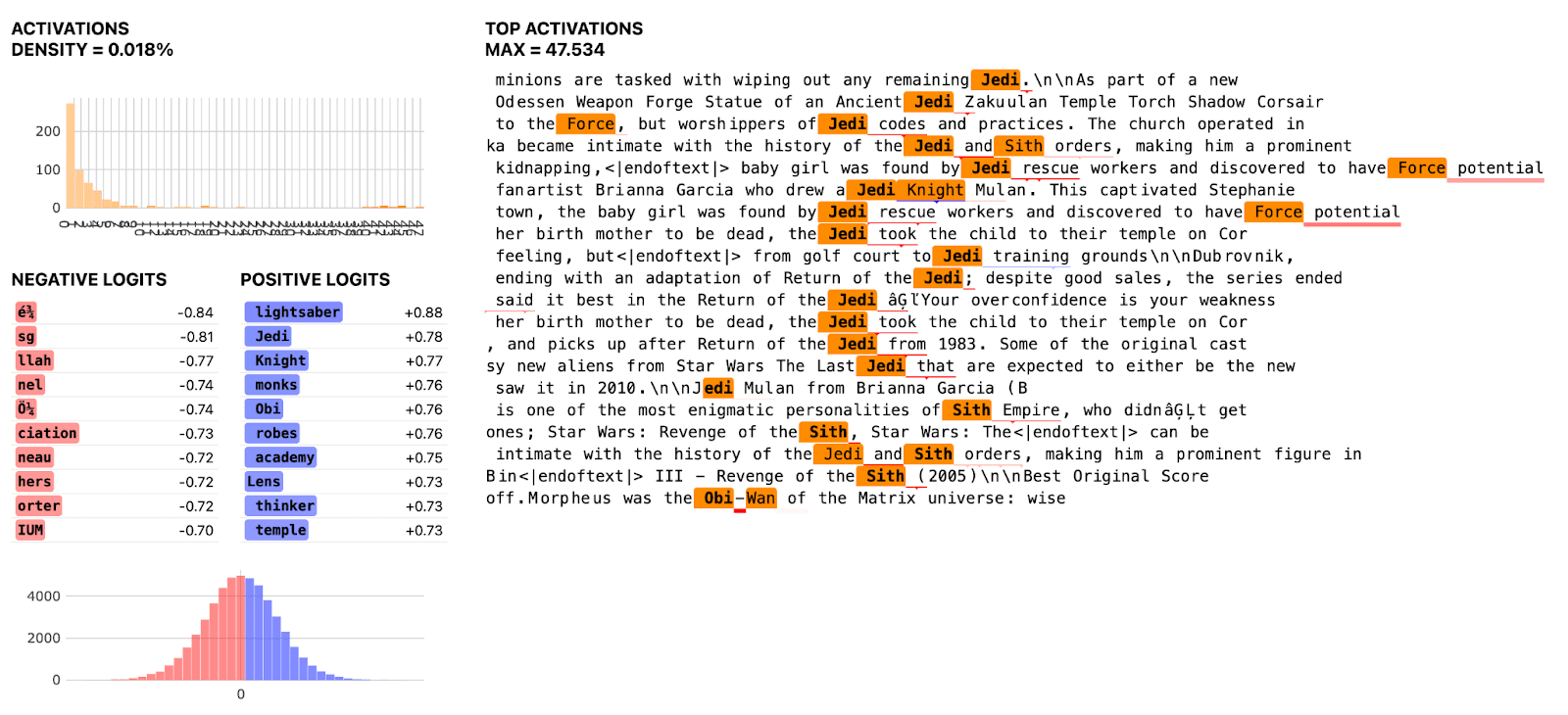

Jbloom GPT2 SAE with Ghost Gradient

Open Source Sparse Autoencoders for all Residual Stream Layers of GPT2-Small — LessWrong

Browse these SAE Features on Neuronpedia! …

https://www.lesswrong.com/posts/f9EgfLSurAiqRJySD/open-source-sparse-autoencoders-for-all-residual-stream

jbloom/GPT2-Small-SAEs-Reformatted at main


https://huggingface.co/jbloom/GPT2-Small-SAEs-Reformatted/tree/main

Distributed training

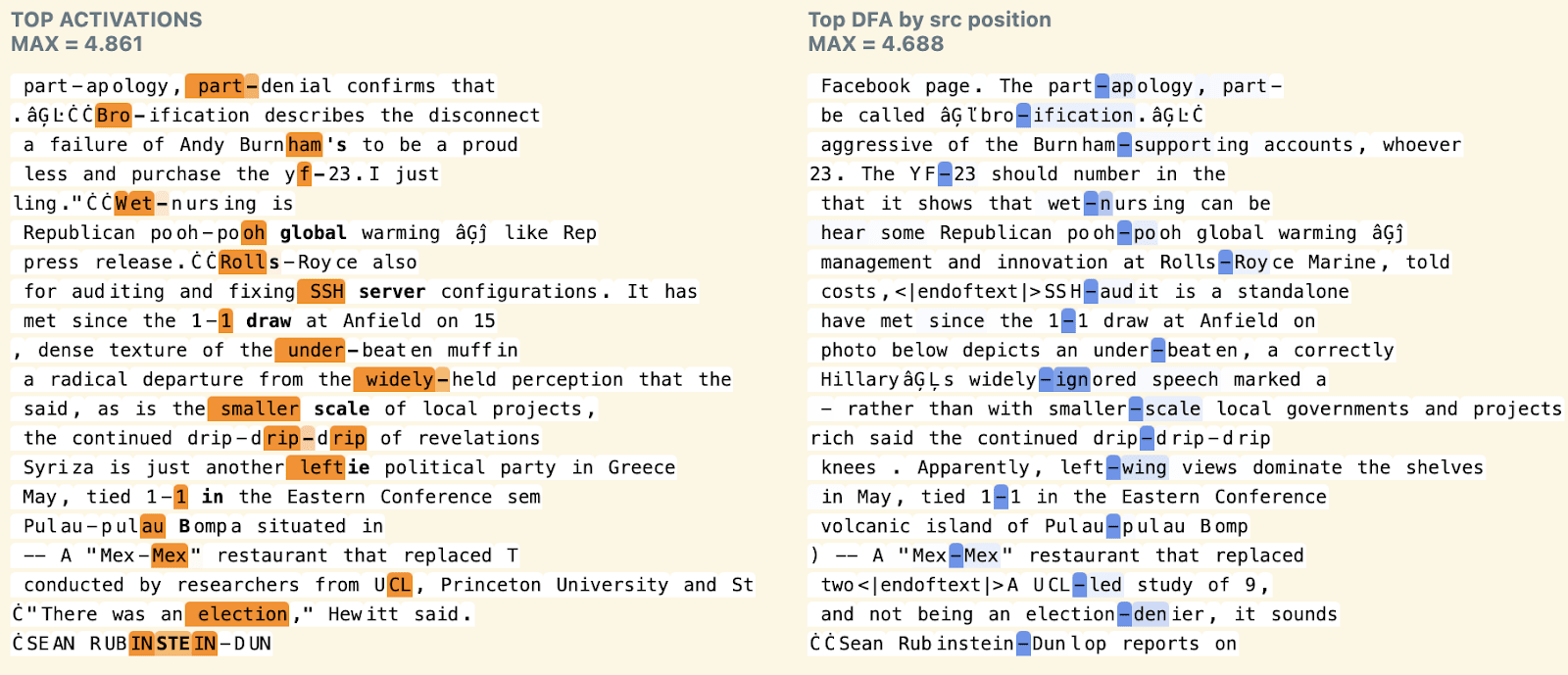

Attention SAEs for GPT2

Attention SAEs Scale to GPT-2 Small — LessWrong

This is an interim report that we are currently building on. We hope this update + open sourcing our SAEs will be useful to related research occurrin…

https://www.lesswrong.com/posts/FSTRedtjuHa4Gfdbr/attention-saes-scale-to-gpt-2-small

ckkissane/attn-saes-gpt2-small-all-layers · Hugging Face


https://huggingface.co/ckkissane/attn-saes-gpt2-small-all-layers

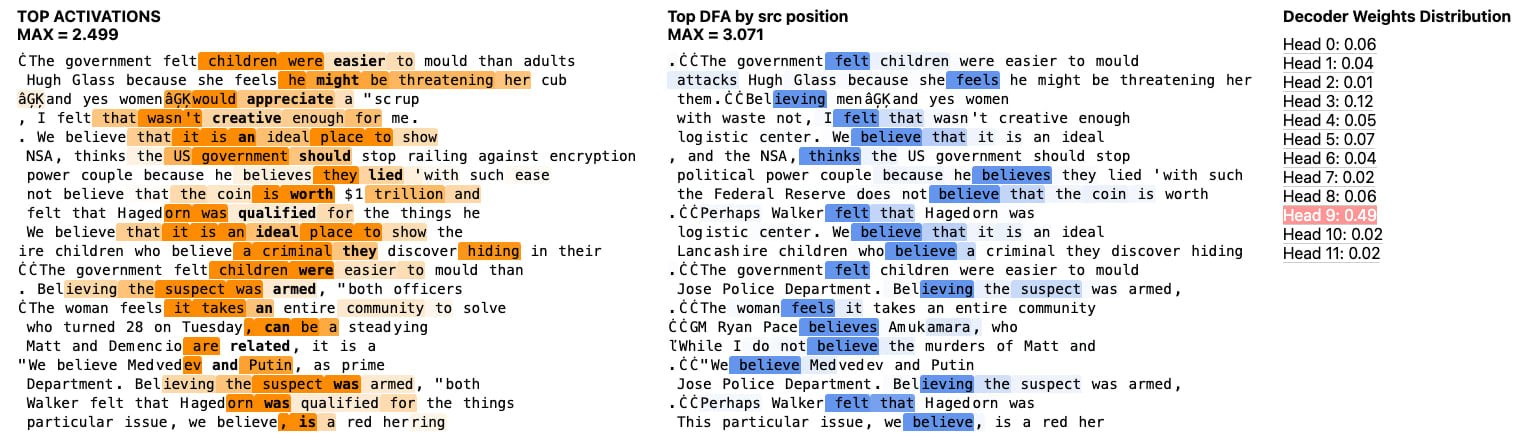

Induction head of GPT2

We Inspected Every Head In GPT-2 Small using SAEs So You Don’t Have To — LessWrong

This is an interim report that we are currently building on. We hope this update will be useful to related research occurring in parallel. Produced a…

https://www.lesswrong.com/posts/xmegeW5mqiBsvoaim/we-inspected-every-head-in-gpt-2-small-using-saes-so-you-don

Seonglae Cho
