Currently, the most successful approaches to world modeling are noise reduction (denoising) for visual processing and the attention mechanism for language processing.

Action-conditioned world model (interactive world model)

The claim that AI's intelligence is limited by its restricted access to the physical world is intuitive but lacks strong evidence. Many organisms, such as rabbits and insects, have direct access to the physical world yet are far less intelligent than AI. What keeps them from human-level intelligence is not a lack of embodiment but the fact that they cannot use language or perform high-level intelligent behaviors. Humans and AI both use language; animals do not. Humans, too, access the world only through limited senses and touch only its surface, which is not fundamentally different from using language as an interface. In simple terms, it is a weak argument to claim a fundamental difference between humans, who cannot directly observe ultrasound or quantum phenomena, and language models, which generalize about the world through language.

Just because humans have physical bodies doesn't mean AI needs one, and giving it one could in fact pose risks. Intelligence and agency cannot be completely separated, but they should not be conflated either. If intelligence is defined as generalization capability, agency is the part that recognizes oneself as a separate entity. Current AI lacks agency, which distinguishes it from many other forms of intelligence and is why many people feel uncomfortable calling AI "intelligent." There is an expectation that a physical body would cause agency to emerge, and it probably will, but this could simultaneously become a starting point for AI to perceive humans as competitors through self-awareness. This may be unavoidable, and granting agency will certainly elevate intelligence to another level; even so, we must develop AI with full awareness that we are moving beyond treating it as merely a tool and creating something more.

World Models

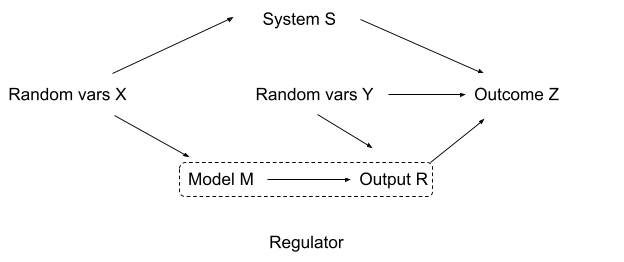

Any AI agent capable of multi-step goal-directed tasks must possess an accurate internal World Model. The paper linked below shows this with a constructive proof: such a model can be extracted from the agent's policy with bounded error. The more an agent learns (more experience), the better it becomes at solving "deeper" goals, and the more accurately its transition probabilities can be reconstructed just by observing its policy. There is no "model-free" shortcut: the ability to achieve long-term, complex goals inherently requires learning an accurate world model. However, the world model does not need to be explicitly defined and trained; a good Next Token Prediction objective and an appropriate implicit AI Incentive are sufficient.

https://www.arxiv.org/pdf/2506.01622
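
The idea of reading transition probabilities off a policy can be illustrated with a toy sketch. This is a simplified illustration under stated assumptions, not the paper's exact algorithm: the agent is assumed to be perfectly optimal, there is a single transition with unknown success probability, and the function names (`prob_at_most`, `agent_prefers_at_most`, `estimate_transition_prob`) are hypothetical. The observer offers the agent a choice between two goals over `n` attempts ("the transition happens at most `k` times" versus "more than `k` times") and only records which goal the agent picks. The smallest `k` at which the agent switches is the median of a Binomial(n, p) distribution, which lies within one count of `n * p`, so the estimate `k / n` is within `1 / n` of the true probability. Deeper goals (larger `n`) give a tighter estimate.

```python
from math import comb


def prob_at_most(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def agent_prefers_at_most(k: int, n: int, p_true: float) -> bool:
    """Hypothetical optimal agent that uses its internal world model (p_true).

    Goal A: the transition occurs at most k times in n attempts.
    Goal B: the transition occurs more than k times in n attempts.
    The agent picks whichever goal it is more likely to achieve.
    """
    return prob_at_most(k, n, p_true) >= 0.5


def estimate_transition_prob(policy, n: int) -> float:
    """Observer side: sees only the policy's choices, never p_true.

    Returns k / n for the smallest k at which the agent prefers goal A.
    """
    for k in range(n + 1):
        if policy(k, n):
            return k / n
    return 1.0  # not reached: at k == n goal A is achieved with certainty


# Usage: the observer queries the policy as a black box.
hidden_p = 0.3  # assumed value known only to the agent
policy = lambda k, n: agent_prefers_at_most(k, n, hidden_p)
shallow = estimate_transition_prob(policy, n=10)
deep = estimate_transition_prob(policy, n=200)
```

In this toy setting the error bound comes purely from the goal depth `n`; handling agents that are only approximately optimal requires the regret-dependent bounds of the paper itself.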

World model Interpretability with Internal Interface Theory

If the AI's internal modules interact through interfaces that are formed consistently, it becomes more likely that humans can understand the format of those interfaces and interpret the entire world model at once.

World-Model Interpretability Is All We Need — LessWrong

Summary, by sections: • 1. Perfect world-model interpretability seems both sufficient for robust alignment (via a decent variety of approaches) and…

https://www.lesswrong.com/posts/HaHcsrDSZ3ZC2b4fK/world-model-interpretability-is-all-we-need

Seonglae Cho
