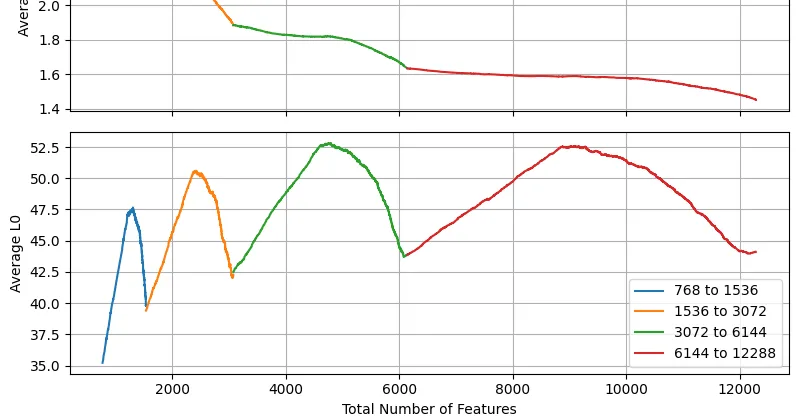

Exchanging latent features across SAEs of different sizes

Reconstruction latent (SAE Feature Splitting, SAE Feature Absorption)

If reconstruction performance degrades or remains unchanged after a latent from the larger SAE is added to the smaller SAE, that latent is judged to be a more detailed representation of latents already present in the smaller SAE. We call it a reconstruction latent.

Novel latent

A novel latent is identified when adding an individual latent from a larger SAE to a smaller SAE improves reconstruction performance (e.g., lowers MSE). This indicates that the latent contains new information not present in the smaller SAE.
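Both definitions reduce to one test: stitch a single latent from the larger SAE into the smaller SAE and check whether the reconstruction MSE drops. The sketch below is a simplified illustration of that criterion, not the authors' code. It assumes a plain ReLU SAE (f = ReLU(x W_enc + b_enc), x̂ = f W_dec + b_dec), a hypothetical dict format for the weights, hypothetical helper names, and a toy 3-dimensional dataset in which the small SAE lacks one direction.

```python
import numpy as np

def sae_forward(x, W_enc, b_enc, W_dec, b_dec):
    """Reconstruct x with a ReLU SAE (assumed architecture).

    Shapes: x (n, d), W_enc (d, m), b_enc (m,), W_dec (m, d), b_dec (d,).
    """
    f = np.maximum(x @ W_enc + b_enc, 0.0)  # latent activations, shape (n, m)
    return f @ W_dec + b_dec

def mse(x, x_hat):
    return float(np.mean((x - x_hat) ** 2))

def classify_large_latents(x, small, large):
    """Label each latent of `large` as "novel" or "reconstruction".

    `small` and `large` are dicts with keys W_enc, b_enc, W_dec, b_dec
    (a hypothetical format). A latent is "novel" if stitching it alone
    into the small SAE lowers the MSE; otherwise it is "reconstruction".
    """
    base = mse(x, sae_forward(x, **small))
    labels = []
    for i in range(large["W_enc"].shape[1]):
        # Append latent i's encoder column, encoder bias, and decoder row.
        stitched = {
            "W_enc": np.concatenate([small["W_enc"], large["W_enc"][:, i:i + 1]], axis=1),
            "b_enc": np.concatenate([small["b_enc"], large["b_enc"][i:i + 1]]),
            "W_dec": np.concatenate([small["W_dec"], large["W_dec"][i:i + 1]], axis=0),
            "b_dec": small["b_dec"],  # keep the small SAE's decoder bias
        }
        delta = mse(x, sae_forward(x, **stitched)) - base
        labels.append("novel" if delta < 0 else "reconstruction")
    return labels

# Toy data: sparse non-negative activations along three orthogonal directions.
rng = np.random.default_rng(0)
d = 3
x = rng.random((200, d)) * (rng.random((200, d)) < 0.3)
eye = np.eye(d)
# The small SAE only knows directions 0 and 1; the large SAE knows all three.
small = {"W_enc": eye[:, :2], "b_enc": np.zeros(2), "W_dec": eye[:2], "b_dec": np.zeros(d)}
large = {"W_enc": eye, "b_enc": np.zeros(3), "W_dec": eye, "b_dec": np.zeros(d)}
print(classify_large_latents(x, small, large))
# → ['reconstruction', 'reconstruction', 'novel']
```

Stitching in a direction the small SAE already covers double-counts it and raises the MSE, so latents 0 and 1 come out as reconstruction latents, while latent 2 fills the missing direction and is novel. The linked posts describe the full procedure; this only illustrates the two definitions.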

Stitching SAEs of different sizes — LessWrong

Work done in Neel Nanda’s stream of MATS 6.0, equal contribution by Bart Bussmann and Patrick Leask, Patrick Leask is concurrently a PhD candidate at…

https://www.lesswrong.com/posts/baJyjpktzmcmRfosq/stitching-saes-of-different-sizes

Stitching Sparse Autoencoders of Different Sizes

Sparse autoencoders (SAEs) are a promising method for decomposing the activations of language models into a learned dictionary of latents, the size of which is a key hyperparameter. However, the...

https://openreview.net/forum?id=VJ66JyKxgp&referrer=%5Bthe%20profile%20of%20Neel%20Nanda%5D(%2Fprofile%3Fid%3D~Neel_Nanda1)

Sparse Autoencoders Do Not Find Canonical Units of Analysis

A common goal of mechanistic interpretability is to decompose the activations of neural networks into features: interpretable properties of the input computed by the model. Sparse autoencoders...

https://arxiv.org/abs/2502.04878

The approach combines an SAE with NMF (non-negative matrix factorization) to turn the model's internal representations into human-understandable units, making the otherwise black-box diffusion model transparently manipulable. NMF groups hundreds of SAE features into several high-level units (factors), effectively recombining features that had fragmented through SAE Feature Splitting. In the factorization $V \approx WH$ (with $W$ and $H$ non-negative), $V$ is the original matrix of SAE activation strengths, each row of $H$ represents a high-level factor, and the values in that row are the weights of the corresponding SAE features.
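To make the factorization $V \approx WH$ concrete, here is a minimal NumPy sketch of plain NMF using the standard Lee-Seung multiplicative updates. It is not the pipeline behind the linked work. The toy activation matrix (300 samples × 6 SAE features with two planted co-activating groups), the hypothetical helper names `nmf` and `top_features`, the update rule, and the choice of $k = 2$ factors are all assumptions for illustration.

```python
import numpy as np

def nmf(V, k, n_iter=500, eps=1e-9, seed=0):
    """Factor a non-negative matrix V (n x m) as W (n x k) @ H (k x m).

    Uses Lee-Seung multiplicative updates for the squared Frobenius loss.
    Returns W, H and the Frobenius reconstruction error at each step.
    """
    rng = np.random.default_rng(seed)
    n, m = V.shape
    W = rng.random((n, k)) + eps
    H = rng.random((k, m)) + eps
    errors = [float(np.linalg.norm(V - W @ H))]  # error of the random initialization
    for _ in range(n_iter):
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
        errors.append(float(np.linalg.norm(V - W @ H)))
    return W, H, errors

def top_features(H, top=3):
    """For each factor (row of H), list the indices of its most heavily weighted SAE features."""
    return [sorted(int(j) for j in np.argsort(row)[::-1][:top]) for row in H]

# Toy "SAE activation" matrix: 6 features, two planted groups that co-activate.
rng = np.random.default_rng(1)
groups = np.zeros((2, 6))
groups[0, :3] = 1.0  # group A: features 0, 1, 2
groups[1, 3:] = 1.0  # group B: features 3, 4, 5
V = rng.random((300, 2)) @ groups  # (300, 6), non-negative like ReLU SAE activations

W, H, errors = nmf(V, k=2)
print(top_features(H))  # compare with the planted groups [0, 1, 2] and [3, 4, 5]
```

Multiplicative updates keep $W$ and $H$ non-negative and do not increase the reconstruction error, but NMF is non-convex, so different seeds can yield different factors. Library solvers such as scikit-learn's `NMF` offer more robust initializations.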

Painting With Concepts Using Diffusion Model Latents

We're launching Paint With Ember: a tool for generating and editing images by directly manipulating the neural activations of AI models. We're also open-sourcing the SAE model that powers the app, and sharing our findings on diffusion models and the features they learn.

https://www.goodfire.ai/blog/painting-with-concepts

Seonglae Cho