Hard to interpret

Quadratic frequency loss

Removed them with a quadratic frequency loss computed over each batch
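The note does not give the exact form of the loss. Below is a minimal sketch that assumes the penalty is the sum of squared per-latent firing frequencies within a batch. The function names `batch_firing_frequency` and `quadratic_frequency_loss` and the `coeff` parameter are hypothetical. Squaring makes dense latents dominate the penalty: a latent that fires on 90% of the batch costs 81 times more than one that fires on 10%.

```python
import numpy as np

def batch_firing_frequency(acts: np.ndarray) -> np.ndarray:
    """Fraction of batch rows in which each latent is active (> 0).

    acts: array of shape (batch, n_latents) holding SAE latent activations.
    """
    return (acts > 0).mean(axis=0)

def quadratic_frequency_loss(acts: np.ndarray, coeff: float = 1.0) -> float:
    """Hypothetical penalty: coeff * sum_i f_i**2, where f_i is latent i's batch frequency."""
    freqs = batch_firing_frequency(acts)
    # Squaring penalizes frequently firing (dense) latents much more than rare ones.
    return float(coeff * np.sum(freqs ** 2))

# Usage: latent 0 fires on every row, latents 1 and 2 fire on one row each.
acts = np.array([
    [1.0, 0.0, 2.0],
    [0.5, 0.0, 0.0],
    [3.0, 1.0, 0.0],
    [2.0, 0.0, 0.0],
])
print(batch_firing_frequency(acts))    # frequencies 1.0, 0.25, 0.25
print(quadratic_frequency_loss(acts))  # 1.0 + 0.0625 + 0.0625 = 1.125
```

The indicator `acts > 0` has zero gradient, so training with this penalty would need a differentiable surrogate, such as a straight-through estimator. This sketch omits that part.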

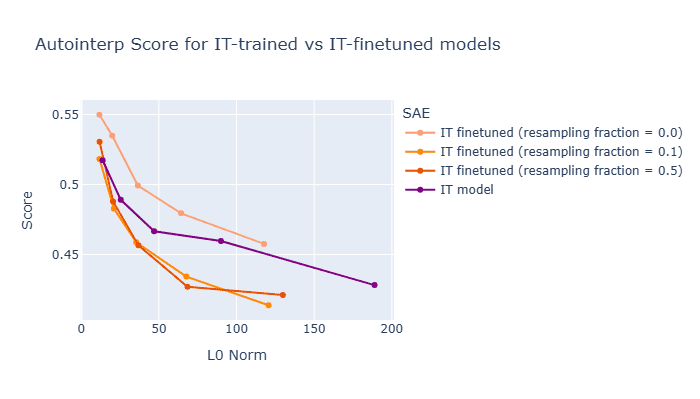

Negative Results for SAEs On Downstream Tasks and Deprioritising SAE Research (GDM Mech Interp Team Progress Update #2) — LessWrong

Lewis Smith*, Sen Rajamanoharan*, Arthur Conmy, Callum McDougall, Janos Kramar, Tom Lieberum, Rohin Shah, Neel Nanda • * = equal contribution …

https://www.lesswrong.com/posts/4uXCAJNuPKtKBsi28/negative-results-for-saes-on-downstream-tasks

Bias Adaptation

Group Bias Adaptation (GBA) adaptively adjusts each neuron's bias so that its activation frequency matches a Target Activation Frequency (TAF). Assigning different TAFs to different neuron groups lets the SAE capture features with different raw frequencies. For single-group BA, we mathematically prove that when the data satisfy "sparse, non-cohesive, anti-coherence" conditions and the requirements on neuron count, bias range, and learning rate are met, all monosemantic features are recovered within O(1) iterations. Empirically, we confirmed better reconstruction loss, activation sparsity, and feature consistency than TopK and ℓ1 SAEs on LLMs with up to 1.5B parameters.
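The following is a minimal sketch of the bias-adaptation idea. It assumes a simple proportional update: a neuron's bias is lowered when its observed batch frequency exceeds its TAF, raised when the frequency is below the TAF, and then clipped to an allowed range. This is not the paper's exact algorithm. The names `bias_adaptation_step` and `group_targets`, the learning rate, the bias-range defaults, and the equal-size grouping scheme are all hypothetical.

```python
import numpy as np

def bias_adaptation_step(
    biases: np.ndarray,
    pre_acts: np.ndarray,
    target_freq: np.ndarray,
    lr: float = 0.1,
    bias_range: tuple = (-1.0, 1.0),
) -> np.ndarray:
    """One hypothetical bias-adaptation update.

    biases:      (n_neurons,) encoder biases
    pre_acts:    (batch, n_neurons) encoder pre-activations without the bias (W x)
    target_freq: (n_neurons,) target activation frequency (TAF) per neuron
    """
    # Observed frequency: fraction of the batch where ReLU(Wx + b) would be nonzero.
    freq = ((pre_acts + biases) > 0).mean(axis=0)
    # Neurons that fire too often get a lower bias (harder to activate), and vice versa.
    new_biases = biases - lr * (freq - target_freq)
    # Keep biases inside an allowed range (placeholder bounds, assumed here).
    return np.clip(new_biases, bias_range[0], bias_range[1])

def group_targets(n_neurons: int, group_freqs: list) -> np.ndarray:
    """Split neurons into near-equal groups, each with its own TAF (hypothetical scheme)."""
    target = np.empty(n_neurons)
    for idx, f in zip(np.array_split(np.arange(n_neurons), len(group_freqs)), group_freqs):
        target[idx] = f
    return target

# Usage: one neuron whose pre-activations span [-1, 1], driven toward a 10% firing rate.
x = np.linspace(-1.0, 1.0, 101).reshape(-1, 1)
b = np.zeros(1)
for _ in range(500):
    b = bias_adaptation_step(b, x, np.array([0.1]))
print(b, ((x + b) > 0).mean())  # bias settles near -0.8, frequency near 0.1
```

In the group variant, passing `group_targets(n, [f_1, f_2, ...])` as `target_freq` gives each neuron group its own TAF. This lets rare and common features each be matched by neurons tuned to a similar frequency.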

arxiv.org

https://arxiv.org/pdf/2506.14002

Or they may simply represent fundamentally dense signals in the model's activations ("dark matter")

openreview.net

https://openreview.net/pdf?id=Zlx6AlEoB0

Seonglae Cho
