normalized version of all MSE numbers, where we divide by a baseline reconstruction error of always predicting the mean activations

- L0

- L1

- L2

- KL

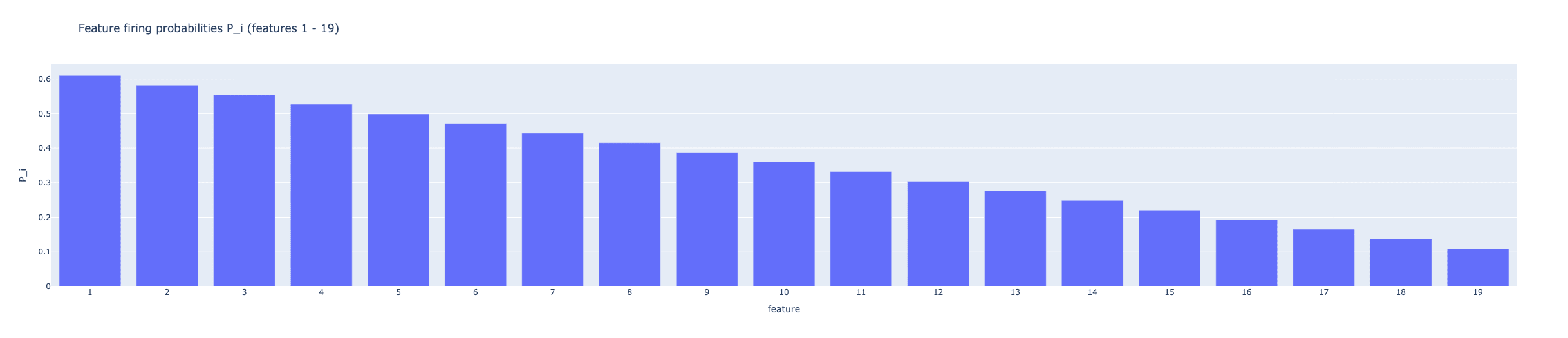

While L0 and L1 allow control of sparsity at the sample level, SAE High Frequency Latent features emerge because there's no pressure applied to the overall SAE Feature Distribution across all samples, resulting in many latents that are sparsely but frequently activated (5% of features activate more than 50% of the time). Therefore, if we develop approaches like BatchTopK SAE, we could provide incentives for different samples to use different features or penalize overlapping feature usage. Through independency loss or Contrastive Learning, we can achieve globally applicable sparsity.

FVU loss (Fraction Variance Unexplained)

The SAE objective is a tradeoff between sparsity and fidelity

arxiv.org

https://arxiv.org/pdf/2410.14670

Jumping Ahead: Improving Reconstruction Fidelity with JumpReLU Sparse Autoencoders

Sparse autoencoders (SAEs) are a promising unsupervised approach for identifying causally relevant and interpretable linear features in a language model’s (LM) activations.

To be useful for downstream tasks, SAEs need to decompose LM activations faithfully; yet to be interpretable the decomposition must be sparse – two objectives that are in tension.

In this paper, we introduce JumpReLU SAEs, which achieve state-of-the-art reconstruction fidelity at a given sparsity level on Gemma 2 9B activations, compared to other recent advances such as Gated and TopK SAEs.

We also show that this improvement does not come at the cost of interpretability through manual and automated interpretability studies.

JumpReLU SAEs are a simple modification of vanilla (ReLU) SAEs – where we replace the ReLU with a discontinuous JumpReLU activation function – and are similarly efficient to train and run.

By utilising straight-through-estimators (STEs) in a principled manner, we show how it is possible to train JumpReLU SAEs effectively despite the discontinuous JumpReLU function introduced in the SAE’s forward pass. Similarly, we use STEs to directly train L0 to be sparse, instead of training on proxies such as L1, avoiding problems like shrinkage.

https://arxiv.org/html/2407.14435v1

aclanthology.org

https://aclanthology.org/2024.blackboxnlp-1.19.pdf

Reconstruction dark matter within Dictionary Learning

A significant portion of SAE reconstruction error can be linearly predicted, but there exists a nonlinear error that does not decrease even when increasing the size. Therefore, additional techniques are needed to reduce nonlinear error.

arxiv.org

https://arxiv.org/pdf/2410.14670

Circuits Updates - July 2024

We report a number of developing ideas on the Anthropic interpretability team, which might be of interest to researchers working actively in this space. Some of these are emerging strands of research where we expect to publish more on in the coming months. Others are minor points we wish to share, since we're unlikely to ever write a paper about them.

https://transformer-circuits.pub/2024/july-update/index.html#dark-matter

While end-to-end training with KL divergence requires more computational resources, using KL divergence just for fine-tuning proves to be effective.

arxiv.org

https://arxiv.org/pdf/2503.17272

L0 is not neutral

L0 is not a neutral hyperparameter — LessWrong

When we train Sparse Autoencoders (SAEs), the sparsity of the SAE, called L0 (the number of latents that fire on average), is treated as an arbitrary…

https://www.lesswrong.com/posts/wjLmMoamcfdDwQB2y/l0-is-not-a-neutral-hyperparameter

Seonglae Cho

Seonglae Cho