CrossCoder (2024)

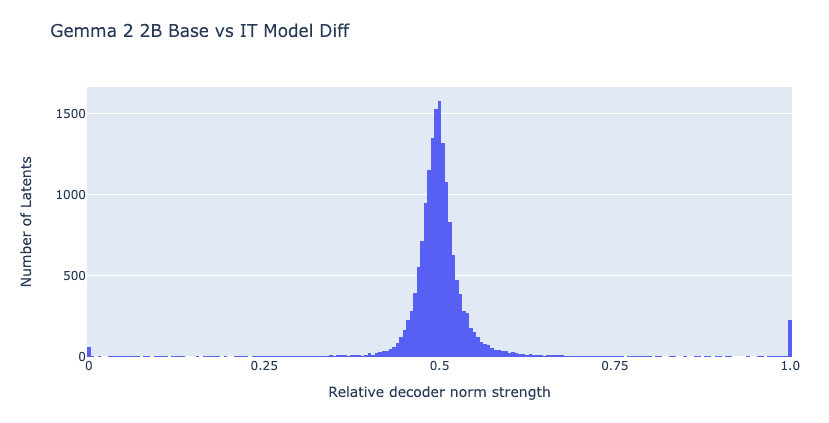

Used to study transferability across fine-tuned models and across model scales by diffing models within the same architecture

Sparse Crosscoders for Cross-Layer Features and Model Diffing

This note will cover some theoretical examples motivating crosscoders, and then present preliminary experiments applying them to cross-layer superposition and model diffing. We also briefly discuss the theory of how crosscoders might simplify circuit analysis, but leave results on this for a future update.

https://transformer-circuits.pub/2024/crosscoders/index.html
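A minimal sketch of the cross-layer idea, assuming the usual formulation: one shared latent vector is encoded from the sum of per-layer encoder outputs, and each layer is reconstructed by its own decoder. The sparsity penalty weights each latent by the sum of its per-layer decoder norms. All names here (`crosscoder_forward`, `crosscoder_loss`, the `"L0"`/`"L1"` layer keys) are hypothetical, and the toy weights are chosen by hand rather than trained.

```python
import math

def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def crosscoder_forward(acts, W_enc, b_enc, W_dec, b_dec):
    """acts: {layer: activation vector}.
    W_enc[layer]: n_latents x d_model, W_dec[layer]: d_model x n_latents."""
    pre = list(b_enc)
    for layer, a in acts.items():
        # Every layer contributes to the same shared pre-activation.
        pre = [p + c for p, c in zip(pre, matvec(W_enc[layer], a))]
    f = [max(0.0, p) for p in pre]  # ReLU latents shared by all layers
    recon = {
        layer: [r + b for r, b in zip(matvec(W_dec[layer], f), b_dec[layer])]
        for layer in acts
    }
    return f, recon

def crosscoder_loss(acts, f, recon, W_dec):
    """Squared reconstruction error plus a decoder-norm-weighted L1 penalty."""
    mse = sum(
        sum((a - r) ** 2 for a, r in zip(acts[l], recon[l])) for l in acts
    )
    penalty = 0.0
    for i, f_i in enumerate(f):
        norm_sum = sum(
            math.sqrt(sum(row[i] ** 2 for row in W_dec[l])) for l in acts
        )
        penalty += f_i * norm_sum
    return mse + penalty

# Toy example: two layers, d_model = 2, n_latents = 2 (hand-picked weights).
eye = [[1.0, 0.0], [0.0, 1.0]]
half = [[0.5, 0.0], [0.0, 0.5]]
acts = {"L0": [1.0, 0.0], "L1": [1.0, 0.0]}
W_enc = {"L0": eye, "L1": eye}
W_dec = {"L0": half, "L1": half}
b_enc = [0.0, -10.0]
b_dec = {"L0": [0.0, 0.0], "L1": [0.0, 0.0]}

f, recon = crosscoder_forward(acts, W_enc, b_enc, W_dec, b_dec)
loss = crosscoder_loss(acts, f, recon, W_dec)
```

In this toy case only the first latent fires, and it reconstructs both layers at once, which is the behavior a cross-layer feature is meant to capture.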

Open Source Replication of Anthropic’s Crosscoder paper for model-diffing — LessWrong

Intro Anthropic recently released an exciting mini-paper on crosscoders (Lindsey et al.). In this post, we open source a model-diffing crosscoder tra…

https://www.lesswrong.com/posts/srt6JXsRMtmqAJavD/open-source-replication-of-anthropic-s-crosscoder-paper-for
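For model diffing, one common summary statistic is the relative decoder norm of each latent across the two models. The sketch below assumes the convention chat / (base + chat), so values near 0 suggest a base-only latent, values near 1 a chat-only latent, and values near 0.5 a shared one. The function names, the convention, and the toy decoder matrices are illustrative assumptions, not taken from either post's code.

```python
import math

def column_norm(W_dec, i):
    """L2 norm of latent i's decoder vector (column i of a d_model x n_latents matrix)."""
    return math.sqrt(sum(row[i] ** 2 for row in W_dec))

def relative_decoder_norm(W_dec_base, W_dec_chat, i):
    """Share of latent i's total decoder norm that sits in the chat model."""
    n_base = column_norm(W_dec_base, i)
    n_chat = column_norm(W_dec_chat, i)
    total = n_base + n_chat
    # Assumption: a latent with zero norm in both models is treated as shared.
    return n_chat / total if total > 0 else 0.5

# Toy decoders: d_model = 2, n_latents = 3 (hand-picked, not trained).
W_dec_base = [[3.0, 0.0, 0.0],
              [4.0, 0.0, 1.0]]
W_dec_chat = [[3.0, 1.0, 0.0],
              [4.0, 0.0, 0.0]]

rel = [relative_decoder_norm(W_dec_base, W_dec_chat, i) for i in range(3)]
```

Here latent 0 is shared, latent 1 exists only in the chat decoder, and latent 2 only in the base decoder.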

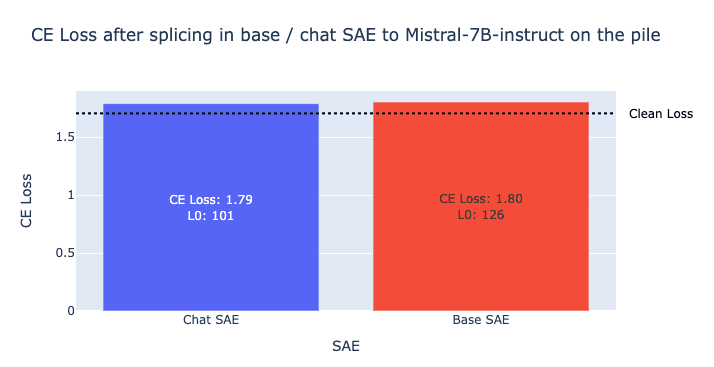

SAE Transferability

SAEs (usually) transfer between base and chat models

SAEs (usually) Transfer Between Base and Chat Models — LessWrong

This is an interim report sharing preliminary results that we are currently building on. We hope this update will be useful to related research occur…

https://www.lesswrong.com/posts/fmwk6qxrpW8d4jvbd/saes-usually-transfer-between-base-and-chat-models

Transfer Learning across layers

Because adjacent layers share representations, a Sparse AutoEncoder (SAE) for one layer can be initialized from an SAE already trained on a neighboring layer instead of being trained from scratch, which significantly reduces training cost and time. Backward transfer worked better than forward transfer, which can be understood as starting with prior knowledge of the computation's results.

- forward SAE

- backward SAE
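The two transfer directions can be sketched as a weight-initialization step. This assumes "forward" means starting the SAE for layer i from the trained SAE of layer i-1, and "backward" means starting from layer i+1; that reading, the function name `init_sae_from_neighbor`, and the dict-based parameter layout are all assumptions for illustration. Fine-tuning on the target layer's activations would follow and is omitted.

```python
import copy

def init_sae_from_neighbor(trained_saes, target_layer, direction):
    """Return initial SAE parameters for target_layer, copied from a neighbor.

    trained_saes: {layer index: parameter dict} for layers already trained.
    direction: "forward" copies from layer - 1, "backward" from layer + 1
    (assumed definitions, see the lead-in).
    """
    if direction == "forward":
        source_layer = target_layer - 1
    elif direction == "backward":
        source_layer = target_layer + 1
    else:
        raise ValueError(f"unknown direction: {direction!r}")
    if source_layer not in trained_saes:
        raise KeyError(f"no trained SAE for layer {source_layer}")
    # Deep copy so fine-tuning the new SAE leaves the source SAE untouched.
    return copy.deepcopy(trained_saes[source_layer])

# Toy parameters for an SAE trained on layer 5 (hand-picked values).
trained_saes = {
    5: {"W_enc": [[0.1, 0.2]], "b_enc": [0.0], "W_dec": [[0.3], [0.4]], "b_dec": [0.0, 0.0]},
}

# Backward transfer: start the layer-4 SAE from the layer-5 SAE.
sae_layer4 = init_sae_from_neighbor(trained_saes, target_layer=4, direction="backward")
sae_layer4["b_enc"][0] = 1.0  # stands in for a fine-tuning update
```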

aclanthology.org

https://aclanthology.org/2024.blackboxnlp-1.32.pdf

Seonglae Cho
