SAE Unlearning Methods

Sparse Autoencoders for Improving Unlearning in Large Language Models

A.K.A: Smallish Large-Language Models: What Do They Know? Can They Un-Know Things?? Let’s Find Out.

https://www.kelechin.com/unlearning1

Limitation

SAEs were not very helpful for unlearning concepts

Interventions aimed at removing specific (biology) knowledge degraded performance in domains unrelated to biology and increased the loss on general text such as OpenWebText. Compared with negative scaling, clamping had fewer side effects and was more effective.
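The sketch below is a minimal NumPy contrast of the two interventions. It is not the paper's code. It assumes a ReLU SAE with hypothetical parameters `W_enc`, `b_enc`, `W_dec`, `b_dec`. It takes "clamping" to mean setting a targeted feature to a fixed negative value `-value` wherever it is active, and "negative scaling" to mean multiplying its activation by `-value`. The SAE reconstruction error is added back so that only the targeted features change the residual stream.

```python
import numpy as np

def sae_encode(x, W_enc, b_enc):
    # Hypothetical ReLU SAE encoder: f = ReLU(x @ W_enc + b_enc)
    return np.maximum(x @ W_enc + b_enc, 0.0)

def sae_decode(f, W_dec, b_dec):
    # Linear SAE decoder: x_hat = f @ W_dec + b_dec
    return f @ W_dec + b_dec

def intervene(x, W_enc, b_enc, W_dec, b_dec, feature_ids, mode, value):
    """Edit selected SAE features of activations x (assumed shape [tokens, d_model])."""
    if mode not in ("clamp", "scale"):
        raise ValueError("mode must be 'clamp' or 'scale'")
    f = sae_encode(x, W_enc, b_enc)
    # Keep the reconstruction error so untouched features pass through exactly
    error = x - sae_decode(f, W_dec, b_dec)
    f_new = f.copy()
    for i in feature_ids:
        if mode == "clamp":
            # Where the feature fires, overwrite it with a fixed negative value
            f_new[:, i] = np.where(f[:, i] > 0, -value, f[:, i])
        else:
            # Negative scaling: proportional to activation (inactive stays 0)
            f_new[:, i] = -value * f[:, i]
    return sae_decode(f_new, W_dec, b_dec) + error

# Usage with random (hypothetical) SAE weights
rng = np.random.default_rng(0)
d_model, d_sae = 8, 16
x = rng.normal(size=(4, d_model))
W_enc = rng.normal(size=(d_model, d_sae))
b_enc = np.zeros(d_sae)
W_dec = rng.normal(size=(d_sae, d_model))
b_dec = np.zeros(d_model)
clamped = intervene(x, W_enc, b_enc, W_dec, b_dec, [0], "clamp", 5.0)
scaled = intervene(x, W_enc, b_enc, W_dec, b_dec, [0], "scale", 5.0)
```

Because clamping writes the same negative value no matter how strongly the feature fired, while scaling depends on activation magnitude, the two can push weakly and strongly activated tokens by very different amounts.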


https://arxiv.org/pdf/2410.19278

Impressive examples that fix LLM reasoning errors

Monitor: An AI-Driven Observability Interface

This write-up is a technical demonstration, which describes and evaluates the use of a new piece of technology. For technical demonstrations, we still run systematic experiments to test our findings, but do not run detailed ablations and controls. The claims are ones that we have tested and stand behind, but have not vetted as thoroughly as in our research reports.

https://transluce.org/observability-interface

Demo

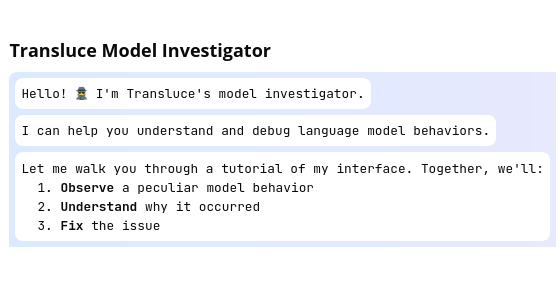

Transluce Monitor

https://monitor.transluce.org/dashboard/chat

Seonglae Cho
