Steering is typically applied at the attention sink token or at the last tokens before generation.

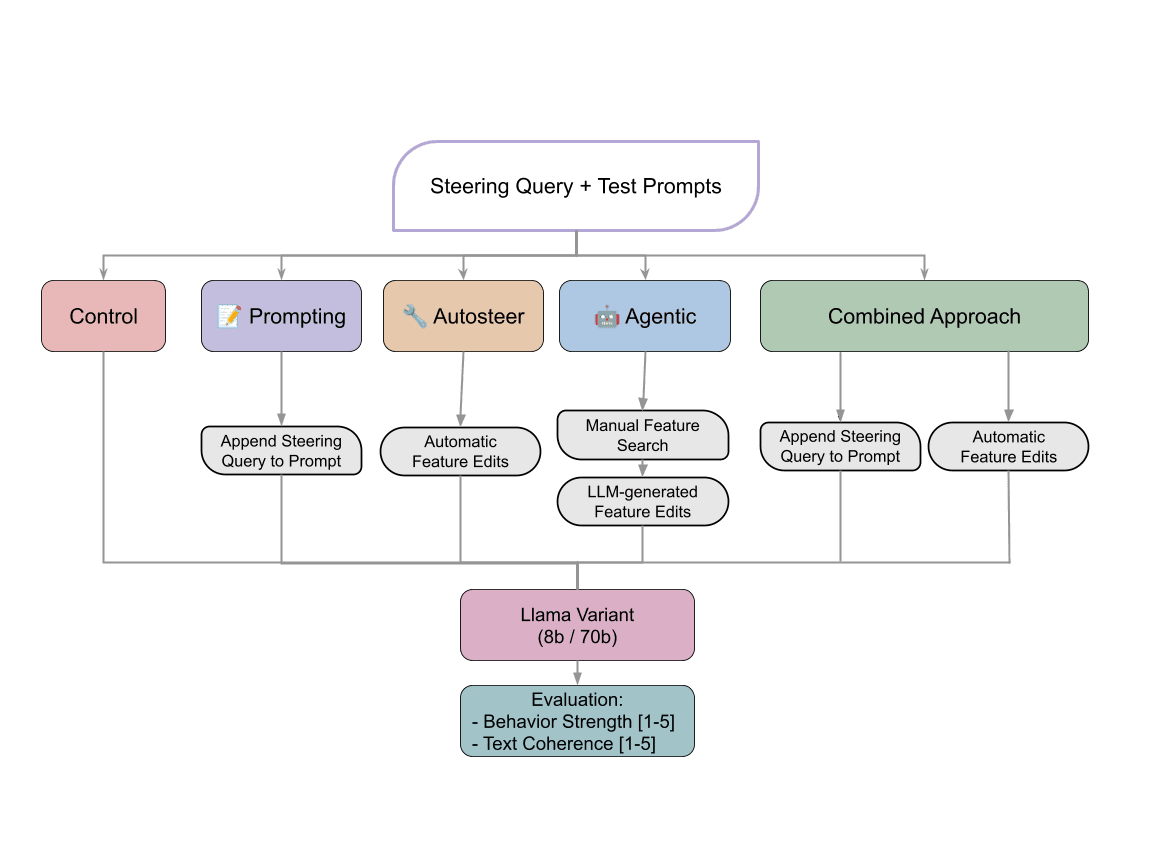

- How to find features

- How much to steer those features

- Which tokens to steer those features at

- How to apply those features
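The questions above map onto the arguments of a simple additive steering step: which features (decoder directions), how much (coefficients), and which tokens (positions).

Below is a minimal, framework-agnostic sketch in NumPy. It makes several assumptions:

- It uses additive steering, which adds scaled SAE decoder directions to the residual stream. Clamping a feature's activation is the common alternative and is not shown.
- `steer_residual` and `select_positions` are hypothetical helpers.
- Treating token 0 as the attention sink is an assumption. It usually holds when the sequence starts with a BOS token.

In a real model, this step would run inside a forward hook at the chosen layer.

```python
import numpy as np


def select_positions(seq_len, mode="last", k=1):
    """Pick token positions to steer (hypothetical helper)."""
    if mode == "sink":
        # Assumption: the first token (e.g. BOS) acts as the attention sink.
        return [0]
    if mode == "last":
        # The last k tokens before generation starts.
        return list(range(max(0, seq_len - k), seq_len))
    raise ValueError(f"unknown mode: {mode}")


def steer_residual(resid, decoder_dirs, coeffs, positions):
    """Additively steer residual-stream activations with SAE decoder directions.

    resid:        (seq_len, d_model) activations at one layer
    decoder_dirs: (n_features, d_model) SAE decoder rows for the chosen features
    coeffs:       (n_features,) steering strength for each feature
    positions:    token indices to steer (no duplicates)
    """
    steered = resid.copy()
    # Unit-normalize each decoder row so each coefficient is in residual-norm units.
    dirs = decoder_dirs / np.linalg.norm(decoder_dirs, axis=1, keepdims=True)
    # Combine all selected features into a single steering vector of shape (d_model,).
    delta = coeffs @ dirs
    # Add the same steering vector at every selected position.
    steered[positions] += delta
    return steered
```

For example, `steer_residual(resid, W_dec[[i]], np.array([4.0]), select_positions(len(resid), "last", k=2))` pushes feature `i` with strength 4 at the final two tokens only. Normalizing the directions keeps "how much" comparable across features whose decoder rows have different norms. Many SAEs already constrain decoder rows to unit norm, in which case this step is a no-op.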

SAE Feature Steering Methods


GoodFire-Autosteer-Evaluation

Eitan-Sprejer • Updated 2025 Nov 22 23:32

Goodfire AI's AutoSteer automatically selects the SAE dictionary features that best distinguish an example dataset from a control dataset. This gives more direct and explainable control over behavior than prompt manipulation alone. Even so, manual feature selection outperformed AutoSteer on Llama-70B in both behavior and consistency. The steering coefficients are stored in an edit set. AutoSteer optimizes the activations and uses L1-regularized regression to regularize the set of features that are adjusted simultaneously. Evaluation used an LLM-as-judge to score both behavior and coherence.
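The two ideas in the paragraph above can be illustrated together:

1. Rank features by how differently they fire on example versus control prompts.
2. Use an L1 penalty so that only a sparse set of features is kept.

This is not Goodfire's implementation, whose internals the note does not describe. It is a hypothetical sketch that swaps in L1-regularized logistic regression, fit by proximal gradient descent (ISTA), as the sparse selector. `rank_by_mean_diff` and `l1_logistic` are illustrative names, and the inputs are assumed to be per-prompt mean SAE activations.

```python
import numpy as np


def rank_by_mean_diff(acts_example, acts_control, top_k=5):
    """Rank features by |mean activation on example - mean activation on control|.

    acts_*: (n_prompts, n_features) per-prompt mean SAE activations (assumed input).
    """
    diff = acts_example.mean(axis=0) - acts_control.mean(axis=0)
    return np.argsort(-np.abs(diff))[:top_k], diff


def l1_logistic(X, y, lam=0.05, lr=0.1, steps=2000):
    """L1-regularized logistic regression via proximal gradient descent (ISTA).

    X: (n, n_features) activations; y: 1 for example prompts, 0 for control.
    Returns sparse weights w and bias b.
    """
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # predicted P(example)
        grad_w = X.T @ (p - y) / n               # gradient of mean log-loss
        grad_b = np.mean(p - y)
        w = w - lr * grad_w
        # Proximal step for lam * ||w||_1: soft-thresholding zeroes weak features.
        w = np.sign(w) * np.maximum(np.abs(w) - lr * lam, 0.0)
        b -= lr * grad_b                         # the bias is not penalized
    return w, b
```

To use the selector, stack example and control activations into `X` and mark the example rows with `y = 1`. Then `np.flatnonzero(w)` gives the sparse feature set. The sign of each weight suggests whether to amplify or suppress that feature. Raising `lam` shrinks the set of features that would be adjusted simultaneously.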

Mind the Coherence Gap: Lessons from Steering Llama with Goodfire — LessWrong


https://www.lesswrong.com/posts/6dpKhtniqR3rnstnL/mind-the-coherence-gap-lessons-from-steering-llama-with-1

Feature searching


https://arxiv.org/pdf/2505.20063v1

Impressive examples that fix LLM reasoning errors

Monitor: An AI-Driven Observability Interface

This write-up is a technical demonstration, which describes and evaluates the use of a new piece of technology. For technical demonstrations, we still run systematic experiments to test our findings, but do not run detailed ablations and controls. The claims are ones that we have tested and stand behind, but have not vetted as thoroughly as in our research reports.

https://transluce.org/observability-interface

Demo

Transluce Monitor

https://monitor.transluce.org/dashboard/chat

SAEs show lower monosemanticity than MLP layers and perform worse at steering than feed-forward network ablation (AxBench).


https://arxiv.org/pdf/2510.22332v1

Seonglae Cho
