All RLHF-like language model RL methods have this to prevent AI Reward Hacking.

Language Model RL uses sequence rewards (a single reward for the complete answer), but training happens at the token level. It is essentially a Contextual Bandit Model that evaluates the generated tokens in a given context. Only the per-token KL divergence term makes the individual token signals differ. There are two directions for resolving this credit-assignment problem in RL on LLMs: a more granular (token- or step-level) Reward Model or, more fundamentally, a World Model.
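The mismatch between a sequence reward and token-level training can be made concrete with a minimal sketch. It follows a pattern common in PPO-based RLHF implementations and assumes a scalar sequence reward, per-token log-probs from the policy and from a frozen reference model, and a KL coefficient `beta` (its value here is hypothetical). Every token receives a KL penalty, and only the last token also receives the outcome reward.

```python
from typing import List

def token_level_rewards(
    seq_reward: float,
    logp_policy: List[float],
    logp_ref: List[float],
    beta: float = 0.1,  # hypothetical KL coefficient
) -> List[float]:
    """Spread one sequence-level reward over the generated tokens.

    Each token t gets -beta * (log pi(a_t|s_t) - log pi_ref(a_t|s_t)),
    a per-token KL penalty estimate; the sequence reward is added to the
    final token only.
    """
    if len(logp_policy) != len(logp_ref) or not logp_policy:
        raise ValueError("need equal-length, non-empty log-prob lists")
    rewards = [-beta * (lp - lr) for lp, lr in zip(logp_policy, logp_ref)]
    rewards[-1] += seq_reward  # the outcome signal lands on the last token
    return rewards

# Three generated tokens; the whole answer was judged correct (reward 1.0).
r = token_level_rewards(1.0, [-0.5, -1.0, -0.2], [-0.7, -1.0, -0.4], beta=0.1)
```

Apart from the KL term, no token learns from the outcome on its own terms. Downstream advantage estimation has to propagate the final reward backward, and that is where the credit-assignment problem comes from.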

Humans do not consider all paths in parallel, nor do they give bonus points to every step of a process just because the final answer was correct. Instead, they regret intermediate steps that were strange and give extra credit to those that helped. In other words, a reflection process that synthesizes multiple trials is also necessary.

- ORM (Outcome Reward Model): A method that gives rewards based only on the final output.

- PRM (Process Reward Model): A method that evaluates and rewards each step.
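The difference between the two can be sketched in a few lines. The step format and the `toy_scorer` function below are hypothetical stand-ins. A real PRM is a learned model that scores each step given the steps before it.

```python
from typing import Callable, List

def orm_rewards(steps: List[str], final_correct: bool) -> List[float]:
    # ORM: one outcome score is broadcast to every step, so a strange
    # intermediate step is credited exactly like a helpful one.
    return [1.0 if final_correct else 0.0] * len(steps)

def prm_rewards(
    steps: List[str], score_step: Callable[[List[str], str], float]
) -> List[float]:
    # PRM: each step is scored on its own, given the preceding steps.
    return [score_step(steps[:i], step) for i, step in enumerate(steps)]

def toy_scorer(prefix: List[str], step: str) -> float:
    # Hypothetical stand-in for a learned PRM: penalize unjustified guesses.
    return 0.0 if "guess" in step else 1.0

steps = ["x = 3 + 4 = 7", "guess y = 10", "answer = 7"]
orm = orm_rewards(steps, final_correct=True)  # every step gets 1.0
prm = prm_rewards(steps, toy_scorer)          # the guessed step gets 0.0
```

The final answer is correct in both cases. Only the PRM signal separates the useless middle step from the useful ones.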

Language Model RL Methods

Language Model Reward Benchmarks

Reinforcement Learning Transformers

Language Model RL Frameworks

Pre-training, mid-training, and RL post-training each contribute to "reasoning capability" in different ways, and these contributions can be causally decomposed in a fully controlled (synthetic) setting. The approach uses DAGs to define reasoning structures and templates (e.g., animal-zoo, teacher-school) that vary only the surface context. Evaluation is process-verified: the model's output CoT is parsed into a graph, and the predicted graph is compared against the ground-truth graph.
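Process verification can be sketched as a comparison of dependency graphs. This sketch assumes a hypothetical CoT format in which each step is a line like `c = a + b`. It parses those lines into a node-to-parents map and reports missing nodes, extra nodes, and dependency mismatches against the ground-truth DAG. The paper's actual parser and output format may differ.

```python
import re
from typing import Dict, FrozenSet, Set

Graph = Dict[str, FrozenSet[str]]  # node -> set of parent nodes it depends on

STEP = re.compile(r"^\s*(\w+)\s*=\s*(.+?)\s*$")  # hypothetical "lhs = expr" step
VAR = re.compile(r"[A-Za-z_]\w*")                 # identifiers; numbers ignored

def parse_cot(cot: str) -> Graph:
    """Parse lines like 'c = a + b' into {'c': {'a', 'b'}}; other lines are skipped."""
    graph: Graph = {}
    for line in cot.splitlines():
        m = STEP.match(line)
        if m:
            lhs, rhs = m.groups()
            graph[lhs] = frozenset(VAR.findall(rhs))
    return graph

def compare_graphs(pred: Graph, gold: Graph) -> Dict[str, Set[str]]:
    shared = set(pred) & set(gold)
    return {
        "missing": set(gold) - set(pred),
        "extra": set(pred) - set(gold),
        "dependency_mismatch": {n for n in shared if pred[n] != gold[n]},
    }

def process_verified(pred: Graph, gold: Graph) -> bool:
    # Correct only if every node is present with exactly the right parents.
    return not any(compare_graphs(pred, gold).values())

gold: Graph = {"a": frozenset(), "b": frozenset(), "c": frozenset({"a", "b"})}
good = parse_cot("a = 3\nb = 4\nc = a + b")
bad = parse_cot("a = 3\nb = 4\nc = a + a")  # right shape, wrong dependency
```

A check like this can reject a CoT that reaches the right number through the wrong dependencies, which an answer-only check would accept.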

Generalization types

- Extrapolative (Depth): Can the model solve problems when the number of operations (reasoning steps) goes deeper than the training range?

- Contextual (Breadth): Does the same reasoning structure transfer when surface context changes?

The conditions under which RL produces 'true capability gain' are narrow. For in-distribution (ID) problems already well covered by pre-training, RL increases pass@1 but barely improves pass@128 → this is closer to "sharpening" existing capabilities. Conversely, when pre-training leaves headroom and the RL data targets the edge of competence (where pass@1 fails but pass@k shows some success), RL produces substantial expansion, with pass@128 improvements even on OOD. RL on problems that are too easy (ID) or too hard (fully OOD-hard) does not train well. In short, RL on easy problems only sharpens, while RL on edge-of-competence problems expands capability.
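Both pass@1 and pass@k can be estimated from n samples per problem with the unbiased estimator from the Codex paper (Chen et al., 2021), and problems can then be bucketed by difficulty. The thresholds `low` and `high` and the bucket names below are hypothetical choices for illustration, not values from the source.

```python
from math import comb

def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k from n samples of which c are correct: 1 - C(n-c,k)/C(n,k)."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)

def difficulty_bucket(
    n: int, c: int, k: int = 128, low: float = 0.1, high: float = 0.9
) -> str:
    """Hypothetical bucketing of one problem by its pass@1 and pass@k."""
    p1, pk = pass_at_k(n, c, 1), pass_at_k(n, c, k)
    if p1 >= high:
        return "too_easy"      # RL here mostly sharpens
    if pk == 0.0:
        return "too_hard"      # no correct rollouts, so no learning signal
    if p1 <= low:
        return "edge"          # pass@1 fails but pass@k finds some successes
    return "intermediate"
```

With 128 samples, a problem solved 3 times has pass@1 of about 0.023 but pass@128 of 1.0, so it falls into the "edge" bucket that the text identifies as the most productive RL data.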

Contextual generalization requires a 'minimal seed'. For a new long-tail context B with 0% to 0.1% pre-training exposure, RL also struggles to transfer. However, if context B appears in pre-training even sparsely (e.g., ≥1%) at the level of atomic primitives, RL amplifies that seed into strong transfer (large pass@128 gains). RL struggles to create something from nothing but excels at scaling up small foundations (seeds).

Mid-training is the 'bridge' that significantly affects RL efficiency. When the ratio (β) between mid-training and RL is varied under the same compute budget, the outcomes diverge. OOD-edge (moderately harder) performance, especially pass@1, is better with a high mid-training share and light RL. OOD-hard (much harder) generalization improves as the RL share increases. Mid-training lays down priors and representations; RL expands exploration and composition on top of them.

PRMs reduce AI Reward Hacking and improve performance. Using only outcome (correctness) rewards easily leads to shortcuts or dishonest reasoning that merely reaches the right answer. There are two ways to counter this. One is mixing in dense rewards based on process verification (an α-weighted mix). The other is granting the outcome reward only when the process is correct. Both reduce structural errors (e.g., dependency mismatches) and improve pass@1, and to some extent pass@128, on OOD.
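The two reward designs can be written as simple functions. This sketch assumes both rewards lie in [0, 1] and that `alpha` weights the process term. The paper's exact convention for α may differ, and the process score (e.g., the fraction of verified steps) is a hypothetical input.

```python
def mixed_reward(outcome: float, process: float, alpha: float) -> float:
    """Dense mix: alpha weights the process reward (assumed convention)."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return (1.0 - alpha) * outcome + alpha * process

def gated_outcome_reward(outcome: float, process_ok: bool) -> float:
    """Outcome reward is paid only when the verified process is correct."""
    return outcome if process_ok else 0.0

# A "shortcut" rollout: correct final answer, wrong reasoning graph.
shortcut_pure = mixed_reward(1.0, 0.0, alpha=0.0)      # outcome-only reward
shortcut_mixed = mixed_reward(1.0, 0.0, alpha=0.5)     # halved by the process term
shortcut_gated = gated_outcome_reward(1.0, process_ok=False)  # no reward at all
```

Under outcome-only rewards the shortcut is paid in full, which is exactly the incentive for reward hacking. Mixing reduces that incentive, and gating removes it.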


https://arxiv.org/pdf/2512.07783

Era of Experience


https://storage.googleapis.com/deepmind-media/Era-of-Experience%20/The%20Era%20of%20Experience%20Paper.pdf

However, RL's advantage fades at large sample counts: it mainly finds better reasoning paths within the model's existing capacity, which makes its total problem-solving coverage (pass@k at large k) smaller.


https://www.arxiv.org/pdf/2504.13837

SFT Memorizes, RL Generalizes (Model Generalization)

While the provocative title is not exactly correct, the paper offers insights that extend even to multimodal models.


SFT Memorizes, RL Generalizes: A Comparative Study of Foundation Model Post-training

https://tianzhechu.com/SFTvsRL/

Fine-tuning 20B LLMs with RLHF on a 24GB consumer GPU


https://huggingface.co/blog/trl-peft

How to Fine-Tune LLMs in 2024 with Hugging Face

In this blog post you will learn how to fine-tune LLMs using Hugging Face TRL, Transformers and Datasets in 2024. We will fine-tune a LLM on a text to SQL dataset.

https://www.philschmid.de/fine-tune-llms-in-2024-with-trl

Agent RL vulnerability

Search LLMs trained with agentic RL may appear safe, but can be easily jailbroken by manipulating the timing of the search step. The RL objective itself fails to suppress harmful queries, making "search first" behavior a critical vulnerability.


https://arxiv.org/pdf/2510.17431

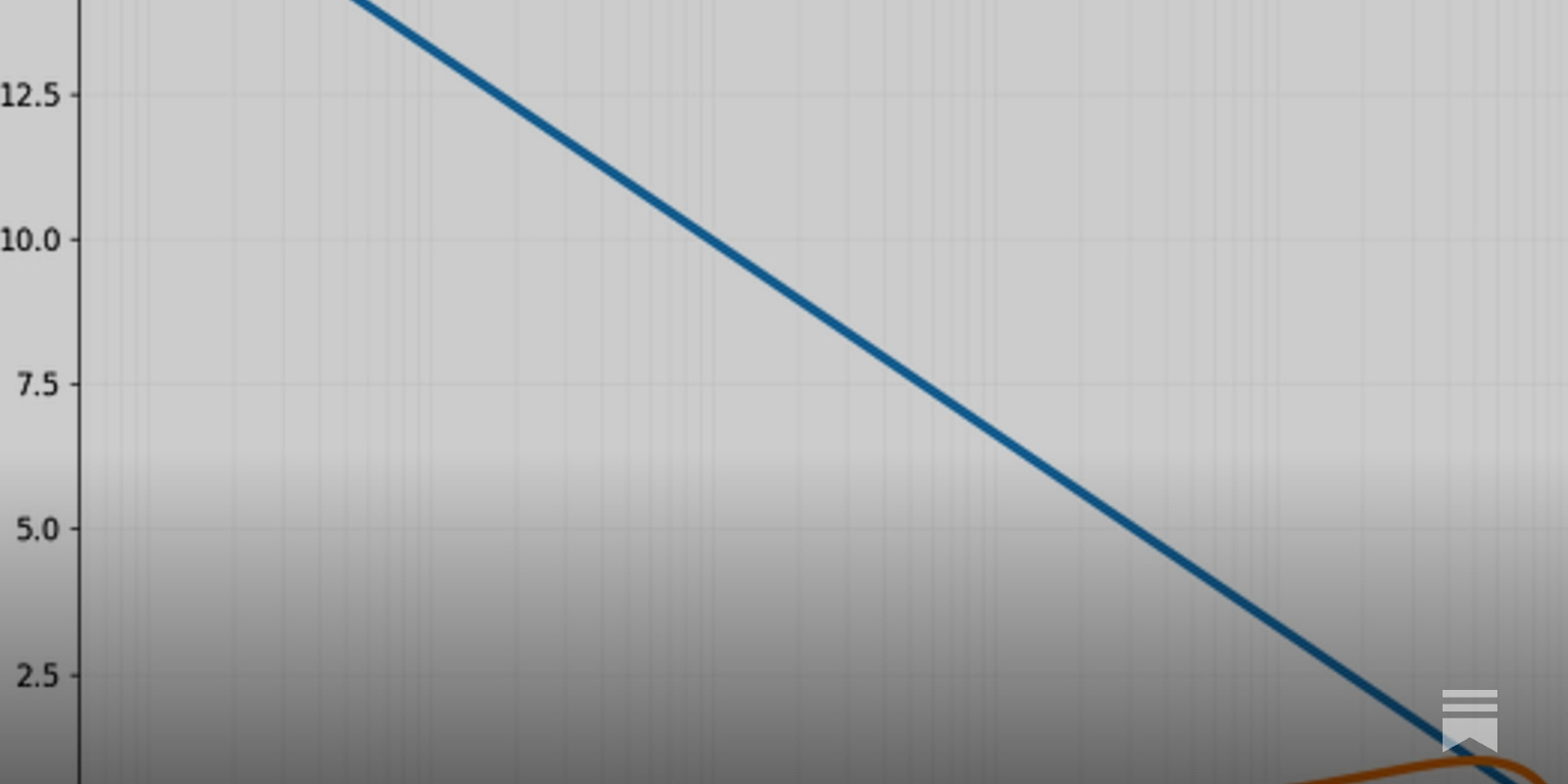

In RL, information density (bits per sample) is very low early in training, which hurts sample efficiency. Supervised learning provides the correct answer for every token, so each sample always carries a lot of information. A binary RL reward carries at most 1 bit per sample, and it is informative only when the probability of a correct answer is around 50%. In the early stages of RL there are almost no correct samples, which leads to extreme gradient variance.
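The gap can be quantified under simple assumptions. For RL, the expected information in one pass/fail reward is the binary entropy of the success probability p. For supervised learning, this sketch uses the summed surprisal (-log2 p) of the target tokens as a rough proxy. Both functions are illustrative and are not taken from the linked post.

```python
import math
from typing import Sequence

def rl_bits_per_sample(p_correct: float) -> float:
    """Binary entropy H(p): expected bits revealed by one pass/fail reward."""
    if not 0.0 <= p_correct <= 1.0:
        raise ValueError("p_correct must be in [0, 1]")
    if p_correct in (0.0, 1.0):
        return 0.0  # outcome is certain, so the reward reveals nothing
    q = 1.0 - p_correct
    return -(p_correct * math.log2(p_correct) + q * math.log2(q))

def sft_bits_per_sample(target_token_probs: Sequence[float]) -> float:
    """Rough proxy: summed surprisal of the supervised target tokens."""
    return sum(-math.log2(p) for p in target_token_probs)

peak = rl_bits_per_sample(0.5)        # maximum: exactly 1 bit
early = rl_bits_per_sample(0.01)      # rare successes: about 0.08 bits
sft = sft_bits_per_sample([0.5] * 4)  # 4 target tokens at p=0.5: 4 bits
```

An SFT signal scales with the number of target tokens, while the RL signal stays capped at 1 bit per rollout and collapses toward 0 when successes are rare.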

Thinking through how pretraining vs RL learn

And implications for RLVR progress

https://www.dwarkesh.com/p/bits-per-sample

Seonglae Cho
