Scaling context size can flexibly reorganize a model's representations, possibly unlocking novel capabilities. Examined from several angles, LLMs appear to scale along the context axis through Language Model Context scaling and LM Context Extending, and this scaling improves how concepts are connected and reconstructed in in-context learning and in the model's representations.

While long-context LLMs offer simplicity, their ability to accurately recall factual information can diminish as the context grows. In other words, the attention mechanism struggles to manage complexity at longer context lengths.
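One way to picture why recall can degrade is a toy softmax model, sketched below. It assumes a single relevant key whose attention score exceeds every other key by a fixed `logit_gap`, with all other keys scoring equally. This setup and the function name are illustrative assumptions, not an analysis taken from the linked sources.

```python
import math


def weight_on_relevant_token(context_len: int, logit_gap: float) -> float:
    """Softmax weight on one relevant key among context_len keys.

    Toy assumption: the relevant key scores `logit_gap`, and the other
    context_len - 1 keys all score 0.
    """
    relevant = math.exp(logit_gap)
    distractors = context_len - 1  # each distractor contributes exp(0) = 1
    return relevant / (relevant + distractors)


if __name__ == "__main__":
    # With a fixed gap, the weight on the relevant token shrinks as context grows.
    for n in (1_000, 10_000, 100_000):
        print(n, round(weight_on_relevant_token(n, logit_gap=5.0), 4))
```

Under these assumptions the relevant token keeps the same score, yet its share of attention falls roughly in proportion to 1/n once the distractors dominate the softmax denominator.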

Language Model Context Extending Methods

https://arxiv.org/pdf/2309.16039.pdf

Paper page - Extending Context Window of Large Language Models via Positional Interpolation

https://huggingface.co/papers/2306.15595
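Positional Interpolation rescales position indices so that a longer window maps back into the position range seen during training, rather than extrapolating beyond it. The following is a minimal pure-Python sketch, assuming rotary position embeddings (RoPE) with the common base of 10000. The function names and the lengths 2048 and 8192 are illustrative choices, not taken from the paper's code.

```python
import math


def interpolation_scale(trained_len: int, target_len: int) -> float:
    """Factor that squeezes target_len positions into the trained range."""
    return min(1.0, trained_len / target_len)


def rope_angles(position: float, dim: int, base: float = 10000.0,
                scale: float = 1.0) -> list[float]:
    """Rotation angle for each 2-D pair; scale < 1 applies interpolation."""
    pos = position * scale
    return [pos / (base ** (2 * i / dim)) for i in range(dim // 2)]


def apply_rope(vec: list[float], angles: list[float]) -> list[float]:
    """Rotate consecutive pairs (vec[2i], vec[2i+1]) by angles[i]."""
    out = []
    for i, theta in enumerate(angles):
        x, y = vec[2 * i], vec[2 * i + 1]
        c, s = math.cos(theta), math.sin(theta)
        out.extend([x * c - y * s, x * s + y * c])
    return out


if __name__ == "__main__":
    # Hypothetical setup: trained on 2048 positions, extended to 8192.
    scale = interpolation_scale(2048, 8192)
    query = [1.0, 0.0, 0.5, -0.5, 0.25, 0.75, -1.0, 2.0]
    # Position 8000 is rotated as if it were position 2000.
    rotated = apply_rope(query, rope_angles(8000, dim=8, scale=scale))
    print(scale, rotated)
```

In this sketch every position in the extended window lands on a (possibly fractional) position inside the original range, which is the core idea; the fine-tuning the paper applies after rescaling is not shown.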

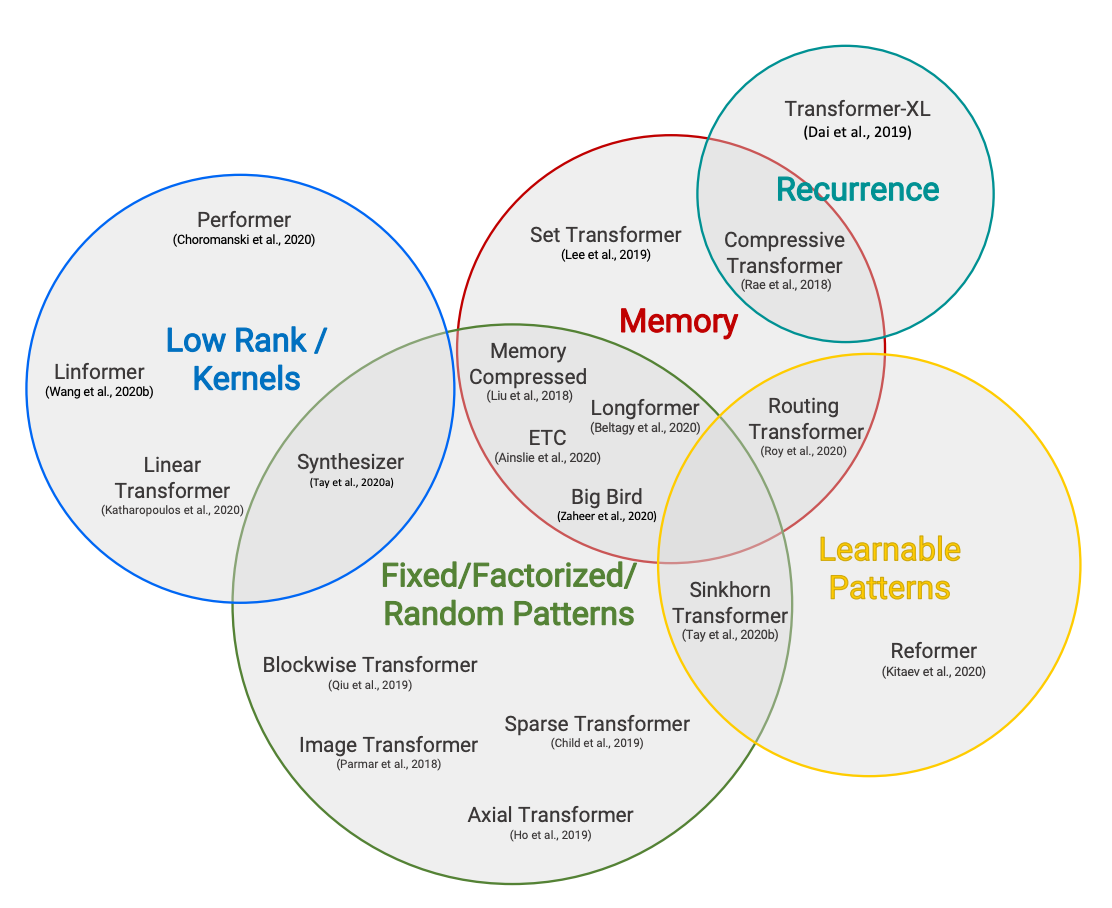

Hugging Face Reads, Feb. 2021 - Long-range Transformers

https://huggingface.co/blog/long-range-transformers

Seonglae Cho
