Window attention, where only the most recent key-value pairs (KVs) are cached, is a natural approach.

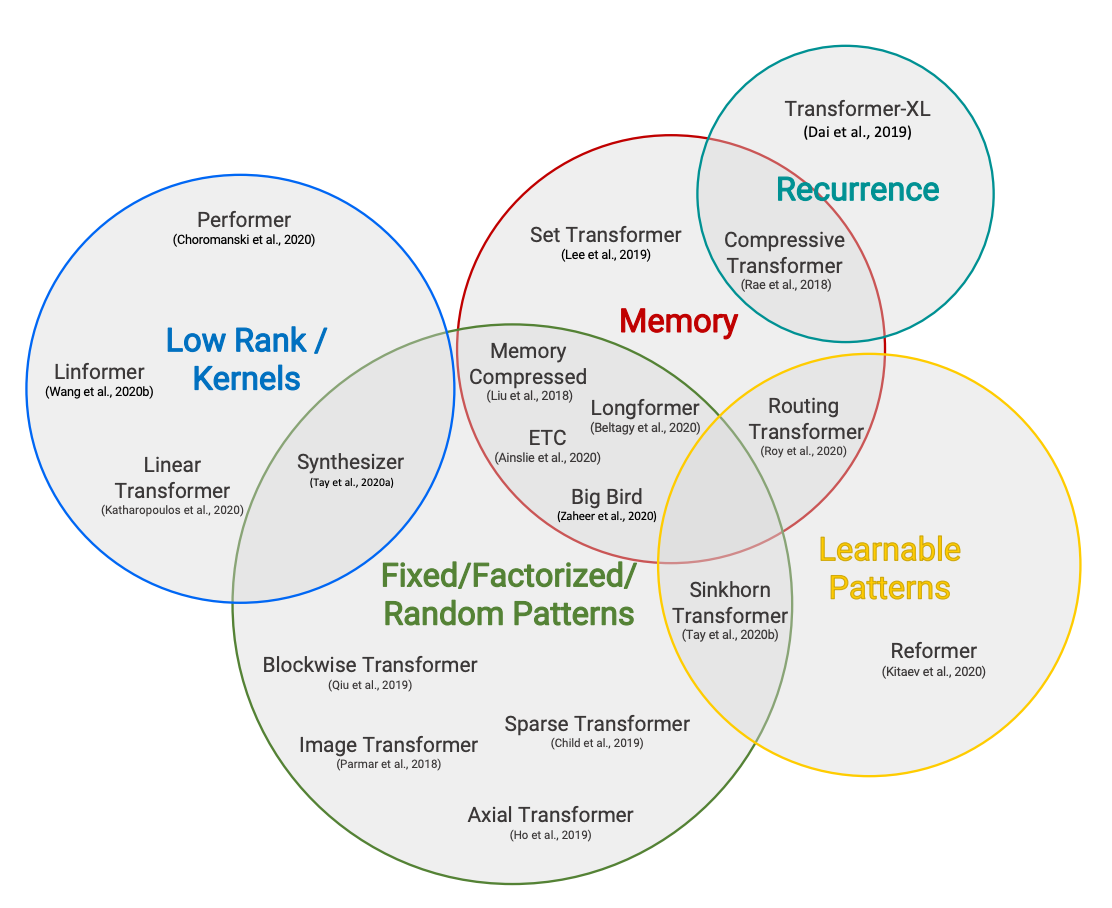
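As a rough illustration of the idea, the sketch below keeps only the most recent KVs in a fixed-size cache and attends over whatever is still cached. The class name `WindowKVCache`, the `window` parameter, and the single-head, pure-Python layout are assumptions made for this example; they are not taken from any particular library or paper implementation.

```python
import math
from collections import deque


class WindowKVCache:
    """Hypothetical single-head cache that keeps only the last `window` KVs."""

    def __init__(self, window):
        # deque(maxlen=...) silently evicts the oldest entry once it is full.
        self.keys = deque(maxlen=window)
        self.values = deque(maxlen=window)

    def append(self, key, value):
        """Cache the key and value vectors of the newest token."""
        self.keys.append(key)
        self.values.append(value)

    def attend(self, query):
        """Scaled dot-product attention over the cached window only."""
        if not self.keys:
            raise ValueError("cache is empty")
        scale = math.sqrt(len(query))
        scores = [
            sum(q * k for q, k in zip(query, key)) / scale
            for key in self.keys
        ]
        # Numerically stable softmax over the window.
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Weighted sum of the cached value vectors.
        dim = len(self.values[0])
        return [
            sum(w * v[i] for w, v in zip(weights, self.values))
            for i in range(dim)
        ]


cache = WindowKVCache(window=2)
cache.append([1.0, 0.0], [1.0])
cache.append([0.0, 1.0], [2.0])
cache.append([0.0, 1.0], [4.0])  # evicts the first KV pair
print(len(cache.keys))
print(cache.attend([0.0, 1.0]))
```

With `window=2`, the third `append` drops the oldest pair, so memory stays constant regardless of sequence length, and tokens outside the window can no longer be attended to.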

Sparse Attentions

Hugging Face Reads, Feb. 2021 - Long-range Transformers

https://huggingface.co/blog/long-range-transformers

LLMs May Not Need Dense Self Attention

Sink Tokens and the Sparsity of Attention Scores in Transformer Models

https://medium.com/@buildingblocks/llms-may-not-need-dense-self-attention-1fa3bf47522e

Seonglae Cho
